
Likelihood of discontinuous progress around the development of AGI

Published 23 February, 2018; last updated 26 May, 2020

We do not know of seemingly compelling arguments to expect large discontinuity in AI progress above the extremely low base rate for all technologies. However this topic is controversial, and many thinkers on the topic disagree with us, so we consider this an open question.

Details

Definitions

We say a technological discontinuity has occurred when a particular technological advance pushes some progress metric substantially above what would be expected based on extrapolating past progress. We measure the size of a discontinuity in terms of how many years of past progress would have been needed to produce the same improvement. We use judgment to decide how to extrapolate past progress.

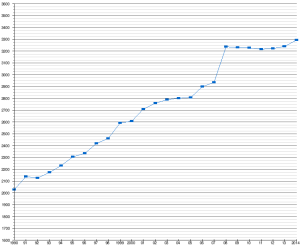

For instance, in the following trend of progress in chess AI performance, we would say that there was a discontinuity in 2007, and it represented a bit over five years of progress at previous rates.
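The size measure defined above can be sketched in a few lines. This is only an illustration: it assumes a straight-line extrapolation through the first and last past observations, whereas the text uses judgment to choose the extrapolation. The function name and the data points are invented, and are not the chess ratings in the figure.

```python
def discontinuity_size_years(years, values, new_year, new_value):
    """Years of progress at the past rate by which a new point beats the trend.

    Assumption: past progress is extrapolated as a straight line through the
    first and last past observations.
    """
    if len(years) < 2 or len(years) != len(values):
        raise ValueError("need at least two matched past observations")
    rate = (values[-1] - values[0]) / (years[-1] - years[0])  # progress per year
    expected = values[-1] + rate * (new_year - years[-1])     # trend value at new_year
    excess = new_value - expected
    if excess <= 0:
        return 0.0  # at or below trend: no discontinuity
    if rate <= 0:
        return float("inf")  # flat past trend: any gain is unboundedly many years
    return excess / rate


# Hypothetical data: a metric improving by 50 points per year, then a jump.
past_years = [2000, 2002, 2004, 2006]
past_values = [2500, 2600, 2700, 2800]
print(discontinuity_size_years(past_years, past_values, 2007, 3100))  # 5.0
```

In this made-up series the 2007 value sits 250 points above trend, which at 50 points per year amounts to five years of progress in one step.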

Relevance

Discontinuity by some measure, on the path to AGI, lends itself to:

- A party gaining decisive strategic advantage

- A single important ‘deployment’ event

- Other very sudden and surprising events

Arguably, the first two require some large discontinuity. Thus the importance of planning for those outcomes rests on the likelihood of a discontinuity.

Outline

We investigate this topic in two parts. First, with no particular knowledge of AGI as a technology, how likely should we expect a particular discontinuity to be? We take the answer to be quite low. Second, we review arguments that AGI is different from other technologies, and lends itself to discontinuity. We currently find these arguments uncompelling, but not decisively so.

Default chance of large technological discontinuity

Discontinuities larger than around ten years of past progress in one advance seem to be rare in technological progress on natural and desirable metrics.1 We have verified around five examples, and know of several other likely cases, though have not completed this investigation.

This does not include the discontinuities when metrics initially go from zero to a positive number. For instance, the metric ‘number of Xootr scooters in the world’ presumably went from zero to one on the first day of production, though this metric had seen no progress before. So on our measure of discontinuity size, this was infinitely many years of progress in one step. It is rarer for a broader metric (e.g. ‘scooters’) to go from zero to one, but it must still happen occasionally. We do not mean to ignore this type of phenomenon, but just to deal with it separately, since discontinuity in an ongoing progress curve seems to be quite rare, whereas the beginning of positive progress on a metric presumably occurs once for every metric that begins at zero.

Proposed reasons to expect a discontinuity near AGI

We have said that the base rate of large technological discontinuities appears to be low. We now review and examine arguments that AGI is unusually likely to either produce a discontinuity, or follow one.

Hominid variation

Argument

Humans are vastly more successful in certain ways than other hominids, yet in evolutionary time, the distance between them is small. This suggests that evolution induced discontinuous progress in returns, if not in intelligence itself, somewhere approaching human-level intelligence. If evolution experienced this, this suggests that artificial intelligence research may do also.

Counterarguments

Evolution does not appear to have been optimizing for the activities that humans are good at. In terms of what evolution was optimizing for—short term ability to physically and socially prosper—it is quite unclear that humans are disproportionately better than earlier hominids, compared to differences between earlier hominids and less optimized animals.

This counterargument implies that if one were to optimize for human-like success, it would be possible (and likely at some point) to make agents with chimp-sized brains that were humanlike but somewhat worse in their capabilities.

It is also unclear to us that humans succeed so well on something like ‘intelligence’ from vastly superior individual intelligence, rather than vastly superior group intelligence, via innovations such as language.

Status

See status section of ‘brain scaling’ argument—they are sufficiently related to be combined.

Brain scaling

Argument

If we speculate that the main relevant difference between us and our hominid relatives is brain size, then we can argue that brain size in particular appears to produce very fast increase in impressiveness. So if we develop AI systems with certain performance, we should expect to make disproportionately better systems by using more hardware.

Variation in brain size among humans also appears to be correlated with intelligence, and differences in intelligence in the human range anecdotally correspond to large differences in some important capabilities, such as ability to develop new physics theories. So if the relatively minor differences in human brain size cause the differences in intelligence, this would suggest that larger differences in ‘brain size’ possible with computing hardware could lead to quite large differences in some important skills, such as ability to successfully theorize about the world.

Counterarguments

This is closely related to the ‘hominids saw discontinuous progress from intelligence’ argument (see above), so shares the counterargument that evolution does not appear to have been optimizing for those things that humans excel at.

Furthermore, if using more hardware generally gets outsized gains, one might expect that by the time we have any particular level of AGI performance, we are already using extremely large amounts of hardware. If this is a cheap axis on which to do better, it would be surprising if we left that value on the table until the end. So the only reason to expect a discontinuity here would seem to be if the opportunity to use more hardware and get outsized results started existing suddenly. We know of no particular reason to expect this, unless there was a discontinuity in something else, for instance if there was indeed one algorithm. That type of discontinuity is discussed elsewhere.

Status

This argument seems weak to us currently, but further research could resolve these questions in directions that would make it compelling:

- Are individual humans radically superior to apes on particular measures of cognitive ability? What are those measures, and how plausible is it that evolution was (perhaps indirectly) optimizing for them?

- How likely is improvement in individual cognitive ability to account for humans’ radical success over apes? (For instance, compared to new ability to share innovations across the population)

- How does human brain size relate to intelligence or particular important skills, quantitatively?

Intelligence explosion

Argument

Intelligence works to improve many things, but the main source of intelligence—human brains—is arguably not currently improved substantially itself. The development of artificial intelligence will change that, by allowing the most intelligent entities to directly contribute to their increased intelligence. This will create a feedback loop where each step of progress in artificial intelligence makes the next steps easier and so faster. Such a feedback loop could potentially go fast. This would not strictly be a discontinuity, in that each step would only be a little faster than the last, however if the growth rate became sufficiently fast sufficiently quickly, it would be much like a discontinuity from our perspective.

This feedback loop is usually imagined to kick in after AI was ‘human-level’ on at least some tasks (such as AI development), but that may be well before it is recognized as human-level in general.
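A toy simulation can illustrate how a loop in which each step is only a little faster than the last might still look abrupt. The model below is an assumption made for illustration, not something the argument specifies: the level grows each step by `rate * level ** returns_exponent`, and all names and parameter values are hypothetical.

```python
def simulate_feedback(returns_exponent, rate=0.05, steps=100, start=1.0, cap=1e12):
    """Toy model: each step adds rate * level ** returns_exponent to the level.

    returns_exponent < 1: proportional growth slows as the level rises
    returns_exponent == 1: ordinary exponential growth
    returns_exponent > 1: proportional growth keeps accelerating
    The cap only keeps the numbers finite.
    """
    level = start
    history = [level]
    for _ in range(steps):
        level = min(level + rate * level ** returns_exponent, cap)
        history.append(level)
    return history


for exponent in (0.5, 1.0, 1.5):
    final = simulate_feedback(exponent)[-1]
    print(f"exponent {exponent}: level after 100 steps = {final:.3g}")
```

Whether anything like the accelerating case describes AI development is exactly what is in dispute; the sketch only shows that the outcome depends heavily on the assumed strength of the feedback.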

Counterarguments

Positive feedback loops are common in the world, and very rarely move fast enough and far enough to become a dominant dynamic in the world. So we need strong reason to expect an intelligence-of-AGI feedback loop to be exceptional, beyond the observation of a potential feedback effect. We do not know of such arguments. However, this is a widely discussed topic, so contenders probably exist.

Status

The counterargument currently seems to us to carry. However, we think the argument could be strong if:

- Strong reason is found to expect an unusually fast and persistent feedback loop

- Quantitative models of current relationships between the variables hypothesized to form a feedback loop suggest very fast intelligence growth.

One algorithm

Argument

If a technology is simple, we might expect to discover it in a small number of steps, perhaps one. If it is also valuable, we might expect each of those steps to represent huge progress in some interesting metric. The argument here is that intelligence, or something close enough to what we mean by intelligence, is like this.

Some reasons to suspect that intelligence is conceptually simple:

- Evolution discovered it relatively quickly (though see earlier sections on hominid evolution and brain size)

- It seems conceivable to some that the mental moves required to play Go are basically all the ones you need for a large class of intellectual activities, at least with the addition of some way to navigate the large number of different contexts that are about that hard to think about.

- It seems plausible that the deep learning techniques we have are already such a thing, and we just need more hardware and adjustment to make it work.

We expect that this list is missing some motivations for this view.

This argument can go in two ways. If we do not have the one algorithm yet, then we might expect that when we find it there will be a discontinuity. If we already have it, yet don’t have AGI, then what remains to be done is probably accruing sufficient hardware to run it. This might traditionally be expected to lead to continuous progress, but it is also sometimes argued to lead to discontinuous progress. Discontinuity in hardware is discussed in a forthcoming section, so here we focus on discontinuity from acquiring the insight.

Counterarguments

Society has invented many simple things before. So even if they are unusually likely to produce discontinuities, the likelihood would still seem to be low, since discontinuities are rare. To the extent that you believe that society has not invented such simple things before, you need a stronger reason to expect that intelligence is such a thing, and we do not know of strong reasons.

Status

We currently find this argument weak, but would find it compelling if:

- Strong reason emerged to expect intelligence to be a particularly simple innovation, among all innovations, and

- We learned that very simple innovations do tend to cause discontinuities, and we have merely failed to explore possible past discontinuities properly.

Deployment scaling

Argument

Having put in the research effort to develop one advanced AI system, it is very little further effort to make a large number of them. Thus at the point that a single AGI system is developed, there could shortly thereafter be a huge number of AGI systems. This would seem to represent a discontinuity in something like global intelligence, and also in ‘global intelligence outside of human brains’.

Counterarguments

It is the nature of almost every product that large quantities of upfront effort are required to build a single unit, at which point many units can be made more cheaply. This is especially true of other software products, where a single unit can be copied without the need to build production facilities. So this is far from a unique feature of AGI, and if it produced discontinuities, we would see them everywhere.

The reason that the ability to quickly scale the number of instances of a product does not generally lead to a discontinuity seems to be that before the item’s development, there was usually another item that was slightly worse, which there were also many copies of. For instance, if we write a new audio player, and it works well, it can be scaled up across many machines. However on each machine it is only replacing a slightly inferior audio player. Furthermore, because everyone already has a slightly inferior audio player, adoption is gradual.

If we developed human-level AGI software right now, it would be much better than what computing hardware is already being used for, and because of that and other considerations, it may spread to a lot of hardware fast. So we would expect a discontinuity. However if we developed human-level AGI software now, this would already be a discontinuity in other metrics. In general, it seems to us that this scaling ability may amplify the effects of existing discontinuities, but it is hard to see how it could introduce one.

Status

This argument seems to us to rest on a confusion. We do not think it holds unless there is already some other large discontinuity.

Train vs. test

Argument

There are at least three different processes you can think of computation being used for in the development of AGI:

- Searching the space of possible software for AGI designs

- Training specific AGI systems

- Running a specific AGI system

We tend to think of the first requiring much more computation than the second, which requires much more computation than the third. This suggests that when we succeed at the first, hardware will have to be so readily available that we can quickly do a huge amount of training, and having done the training, we can run vast numbers of AI systems. This seems to suggest a discontinuity in number of well-trained instances of AGI systems.

Counterarguments

This seems to be a more specific version of the deployment scaling argument above, and so seems to fall prey to the same counterargument: that whenever you find a good AGI design and scale up the number of systems running it, you are only replacing somewhat worse software, so this is not a discontinuity in any metric of high level capability. For there to be a discontinuity, it seems we would need a case where step 1 returns an AGI design that is much better than usual, or returns a first AGI design that is already very powerful compared to other ways of solving the high level problems that it is directed at. These sources of discontinuity are both discussed elsewhere.

Status

This seems to be a variant of the deployment scaling argument, and so similarly weak. However it is more plausible to us here that we have failed to understand some stronger form of the argument, that others find compelling.

Starting high

Argument

As discussed in ‘Default chance of large technological discontinuity’ above, the first item in a trend always represents a ‘large’ discontinuity in the number of such items: the number has been zero forever, and now it is one, which is more progress at once than in all of history. When we said that discontinuities were rare, we explicitly excluded discontinuities such as these, which appear to be common but uninteresting. So perhaps a natural place to find an AGI-related discontinuity is here.

Discontinuity from zero to one in the number of AGI systems would not be interesting on its own. However perhaps there are other metrics where AGI will represent the first step of progress. For instance, the first roller coaster caused ‘number of roller coasters’ to go from zero to one, but also ‘most joy caused by a roller coaster’ to go from zero to some positive number. And once we admit that inventing some new technology T could cause some initial discontinuity in more interesting trends than ‘number of units of T’, we do not know very much about where or how large such first steps may be (since we were ignoring such discontinuities). So the suggestion is that AGI might see something like this: important metrics going from zero to high numbers, where ‘high’ is relative to social impact (our previous measure of discontinuities being unhelpful in the case where progress was previously flat). Call this ‘starting high’.

Having escaped the presumption of a generally low base rate of interesting discontinuities then, there remain the questions of what the base rate should be for new technologies ‘starting high’ on interesting metrics, and whether we should expect AGI in particular to see this even above the new base rate.

One argument that the base rate should be high is that new technologies have a plethora of characteristics, and empirically some of them seem to start ‘high’, in an intuitive sense. For instance, even if the first plane did not go very far, or fast, or high, it did start out 40 feet wide; we didn’t go through flying machines that were only an atom’s breadth, or only slightly larger than albatrosses. ‘Flying machine breadth’ is not a very socially impactful metric, but if non-socially impactful metrics can start high, then why not think that socially impactful metrics can?

Either way, we might then argue that AGI is especially likely to produce high starting points on socially impactful metrics:

- AGI will be the first entry in an especially novel class, so it is unusually likely to begin progress on metrics we haven’t seen progress on before.

- Fully developed AGI would represent progress far above our current capabilities on a variety of metrics. If fully developed AGI is unusually impactful, this suggests that the minimal functional AGI is also unusually impactful.

- AGI is unusually non-amenable to coming in functional yet low quality varieties. (We do not understand the basis for this argument well enough to relay it. One possibility is that the insights involved in AGI are sufficiently few or simple that having a partial version naturally gets you a fully functional version quickly. This is discussed in another section. Another is that AGI development will see continuous progress in some sense, but go through non-useful precursors—much like ‘half of a word processor’ is not a useful precursor to a word processor. This is also discussed separately elsewhere.)

Counterarguments

While it is true that new technologies sometimes represent discontinuities in metrics other than ‘number of X’, these seem very unlikely to be important metrics. If they were important, there would generally be some broader metric that had previously been measured that would also be discontinuously improved. For instance, it is hard to imagine AGI suddenly possessing some new trait Z to a degree that would revolutionize the economy, without this producing discontinuous change in previously measured things like ‘ability to turn resources into financial profit’.

Which is to say, it is not a coincidence that the examples of high starting points we can think of tend to be unimportant. If they were important, it would be strange if we had not previously made any progress on achieving similar good outcomes in other ways, and if the new technology didn’t produce a discontinuity in any such broader goal metrics, then it would not be interesting. (However it could still be the case that we have so far systematically failed to look properly at very broad metrics as they are affected by fairly unusual new technologies, in our search for discontinuities.)

We know of no general trend where technologies that are very important when well developed cause larger discontinuities when they are first begun. We have also not searched for this specifically, but it isn’t an immediately apparent trend in the discontinuities we know of.2

Supposing that an ideal AGI is much smarter than a human, then humans clearly demonstrate the possibility of functional lesser AGIs. (Which is not to say that ‘via human-like deviations from perfection’ is a particularly likely trajectory.) Humans also seem to demonstrate the possibility of developing human-level intelligence, then not immediately tending to have far superior intelligence. This is usually explained by hardware limitations, but seems worth noting nonetheless.

Status

We currently think it is unlikely that any technology ‘starts high’ on any interesting metric, and either way AGI does not seem especially likely to do so. We would find this argument more compelling if:

- We were shown to be confused about the inference that ‘starting high’ on an interesting metric should necessarily produce discontinuity in broader, previously improved metrics. For instance, if a counterexample were found.

- Historical data was found to suggest that ultimately valuable technologies tended to ‘start high’ or produce discontinuities.

- Further arguments emerged to expect AGI to be especially likely to ‘start high’.

Awesome AlphaZero

Argument

We have heard the claim that AlphaZero represented a discontinuity, at least in the ability to play a variety of games using the same system, and maybe on other metrics. If so, this would suggest that similar technologies were unusually likely to produce discontinuities.

Counterarguments

Supposing that AlphaZero did represent discontinuity on playing multiple games using the same system, there remains a question of whether that is a metric of sufficient interest to anyone that effort has been put into it. We have not investigated this.

Whether or not this case represents a large discontinuity, if it is the only one among recent progress on a large number of fronts, it is not clear that this raises the expectation of discontinuities in AI very much, and in particular does not seem to suggest discontinuity should be expected in any other specific place.

Status

We have not investigated the claims this argument is premised on, or examined other AI progress especially closely for discontinuities. If it turned out that:

- Recent AI progress represented at least one substantial discontinuity

- The relevant metric had been a focus of some prior effort

then we would consider more discontinuities around AGI more likely. Beyond raising the observed base rate of such phenomena, this would also suggest to us that the popular expectation of AI discontinuity is well founded, even if we have failed to find good explicit arguments for it. So this may raise our expectation of discontinuous change more than the change in base rate would suggest.

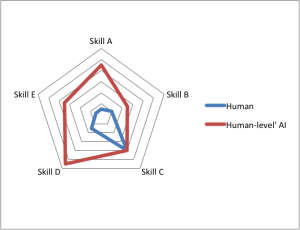

Uneven skills

Argument

There are many axes of ability, and to replace a human at a particular task, a system needs to have a minimal ability on all of them. By the time it does, it should be substantially superhuman on some axes. For instance, by the time that self-driving cars are safe enough in all circumstances to be allowed to drive, they might be very much better than humans at noticing bicycles. So there might be a discontinuity in bicycle safety at the point that self-driving cars become common.

If this were right, it would also suggest a similar effect near overall human-level AGI: that the first time we can automate a variety of tasks as well as a human, we will be able to automate them much better than a human on some axes. So those axes may see discontinuity.
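The structure of this argument can be sketched as a minimum over axes. The axes, starting levels and rates below are hypothetical, and linear improvement is assumed only to keep the example short; levels are expressed as a percentage of human ability.

```python
HUMAN_LEVEL = 100  # percent of human ability


def first_replacement_year(start_levels, yearly_gains, max_years=100):
    """Return (year, levels) for the first year every axis reaches human level.

    Assumption: each axis improves linearly by its yearly gain.
    """
    for year in range(max_years + 1):
        levels = {axis: start_levels[axis] + yearly_gains[axis] * year
                  for axis in start_levels}
        if min(levels.values()) >= HUMAN_LEVEL:
            return year, levels
    return None, None


# Hypothetical axes for a self-driving system.
start = {"noticing bicycles": 50, "unusual road layouts": 20}
gains = {"noticing bicycles": 50, "unusual road layouts": 10}
year, levels = first_replacement_year(start, gains)
print(year, levels)  # 8 {'noticing bicycles': 450, 'unusual road layouts': 100}
```

With these invented numbers the system is deployable only when its weakest axis catches up, by which time its strongest axis is already at 4.5 times human level.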

Counterarguments

This phenomenon appears to be only weakly tied to AGI in particular, and should show up whenever one type of process replaces another one. Replacement of one type of system with another type does not seem very rare. So if it is true that large discontinuities are rare, then this type of dynamic does not produce large discontinuities often.

This argument benefits from the assumption that you have to ‘replace’ a human in one go, which seems usually false. New technology can usually be used to complement humans, admittedly with inefficiency relative to a more integrated system. For instance, one reason there will not be a discontinuity in navigation ability of cars on the road is that humans already use computers for their navigation. However this counterargument doesn’t apply to all axes. For instance, if computers operate quite fast but cannot drive safely, you can’t lend their speed to humans and somehow still use the human steering faculties.

Status

This argument seems successfully countered, unless:

- We have missed some trend of discontinuities of this type elsewhere. If there were such a trend, it seems possible that we would have systematically failed to notice them because they look somewhat like ‘initial entry goes from zero to one’, or are in hard to measure metrics.

- There are further reasons to expect this phenomenon to apply in the AGI case specifically, especially without being substantially mitigated by human-complementary technologies

Payoff thresholds

Argument

Even if we expect progress on technical axes to change continuously, there seems no reason this shouldn’t translate to discontinuous change in a related axis such as ‘economic value’ or ‘ability to take over the world’. For instance, continuous improvement in boat technology might still lead to a threshold in whether or not you can reach the next island.
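The boat example can be written out as a short sketch: a smoothly improving technical metric passed through a threshold payoff. The distances and improvement rate are invented for illustration.

```python
def islands_reachable(boat_range_km, island_distances_km):
    """Count the islands that lie within the boat's range."""
    return sum(1 for distance in island_distances_km if distance <= boat_range_km)


# Hypothetical: range improves by 10 km per year; the next island is 100 km away.
for year in range(8, 12):
    boat_range = 10 * year
    print(year, boat_range, islands_reachable(boat_range, [100]))
# 8 80 0
# 9 90 0
# 10 100 1
# 11 110 1
```

Range rises by the same amount every year, yet the payoff metric steps from zero to one in a single year.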

Counterarguments

There actually seems to be a stronger theoretical reason to expect continuous progress in metrics that we care about directly, such as economic value, than on more technical metrics. This is that if making particular progress is expected to bring outsized gains, more effort should be directed toward the project until that effort has offset a large part of the gains. For instance, suppose that for some reason guns were a hundred times more useful if they could shoot bullets at just over 500m/s than just under, and otherwise increasing speed was slightly useful. Then if you can make a 500m/s gun, you can earn a hundred times more for it than a lesser gun. So if guns can currently shoot 100m/s, gun manufacturers will be willing to put effort into developing the 500m/s one up to the point that it is costing nearly a hundred times more to produce it. Then if they manage to produce it, they do not see a huge jump in value of making guns, and the buyer doesn’t see a huge jump in value of gun – their gun is much better but also much more expensive. However at the same time, there may have been a large jump in ‘speed of gun’.
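The gun example can be made concrete with invented numbers. The values and costs below are hypothetical; in particular, the fast gun's cost is assumed to have been bid up until it absorbs most of the extra value, as the argument supposes.

```python
def surplus(gun):
    """Net benefit: what the gun is worth minus what it costs to produce."""
    return gun["value"] - gun["cost"]


# Hypothetical units of value and cost.
slow_gun = {"speed_m_per_s": 100, "value": 10, "cost": 9}
# Worth 100 times more, but development effort has raised cost to nearly match.
fast_gun = {"speed_m_per_s": 500, "value": 1000, "cost": 998}

speed_ratio = fast_gun["speed_m_per_s"] / slow_gun["speed_m_per_s"]
value_ratio = fast_gun["value"] / slow_gun["value"]
surplus_ratio = surplus(fast_gun) / surplus(slow_gun)
print(speed_ratio, value_ratio, surplus_ratio)  # 5.0 100.0 2.0
```

Under these assumptions the technical metric jumps fivefold and gross value a hundredfold, while the net gain to makers and buyers merely doubles.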

This is a theoretical argument, and we do not know how well supported it is. (It appears to be at least supported by the case of nuclear weapons, which were discontinuous in the technical metric ‘explosive power per unit mass’, but not obviously so in terms of cost-effectiveness).

In practice, we don’t know of many large discontinuities in metrics of either technical performance or things that we care about more directly.

Even if discontinuities in value metrics were common, this argument would need to be paired with another for why AGI in particular should be expected to bring about a large discontinuity.

Status

This argument currently seems weak. In the surprising event that discontinuities are actually common in metrics more closely related to value (rather than generally rare, and especially so in that case), the argument would only be mildly stronger, since it doesn’t explain why AGI is especially susceptible to this.

Human-competition threshold

Argument

There need not be any threshold in nature that makes a particular level of intelligence much better. In a world dominated by humans, going from being not quite competitive with humans to slightly better could represent a step change in value generated.

This is a variant of the above argument from payoff thresholds that explains why AGI is especially likely to see discontinuity, even if it is otherwise rare: it is not often that something as ubiquitous and important as humans is overtaken by another technology.

Counterarguments

Humans have a variety of levels of skill, so even ‘competing with humans’ is not a threshold. You might think that humans are in some real sense very close to one another, but this seems unlikely to us.

Even competing with a particular human is unlikely to have threshold behavior—without for instance a requirement that someone hire one or the other—because there are a variety of tasks, and the human-vs.-machine differential will vary by task.

Status

This seems weak. We would reconsider if further evidence suggested that the human range is very narrow, in some ubiquitously important dimension of cognitive ability.

Notes

- We expect discontinuities can be found in the following areas, but these do not seem interesting:

- convoluted metrics designed to capture discontinuities, and not likely to be considered a natural measure of success

- metrics with additional details that aren’t necessarily desirable, e.g. we expect ‘Xootr scooter miles/dollar’ to be more discontinuous than ‘scooter miles/dollar’, and for that to be more discontinuous than ‘transport miles/dollar’. Similarly, the day an update to OSX is launched, the number of users of that particular operating system presumably grows rapidly, but the number of users of operating systems does not, and nor does any natural operating system performance metric that we know of.

- metrics that nobody is trying to perform well on, e.g. the number of eight-foot tall unicorns made of marshmallow might undergo a sudden increase if some particular rich person becomes interested. This is uninteresting to us in part because it doesn’t represent a change in the underlying technology, and in part because, by its nature, if anybody cared how many such unicorns there were, the metric wouldn’t have been discontinuous.