MIRI AI Predictions Dataset

Published 20 May, 2015; last updated 10 December, 2020

The MIRI AI predictions dataset is a collection of public predictions about human-level AI timelines. We edited the original dataset, as described below. Our dataset is available here, and the original here.

Interesting features of the dataset include:

- The median dates at which people’s predictions suggest AI is less likely than not and more likely than not are 2033 and 2037 respectively.

- Predictions made before 2000 and after 2000 are distributed similarly, in terms of the time remaining until AI at the time the prediction was made.

- Six predictions made before 1980 were probably systematically sooner than predictions made later.

- AGI researchers appear to be more optimistic than AI researchers.

- People predicting AI in public statements (in the MIRI dataset) predict earlier dates than demographically similar survey takers do.

- Age and predicted time to AI are almost entirely uncorrelated: r = -.017.

Details

History of the dataset

We got the original MIRI dataset from here. According to the accompanying post, the Machine Intelligence Research Institute (MIRI) commissioned Jonathan Wang and Brian Potter to gather the data. Kaj Sotala and Stuart Armstrong analyzed and categorized it (their categories are available in both versions of the dataset). It was used in the papers Armstrong and Sotala 2012 and Armstrong and Sotala 2014. We modified the dataset, as described below. Our version is here.

Our changes to the dataset

These are changes we made to the dataset:

- There were a few instances of summary results from large surveys included as single predictions – we removed these because survey medians and individual public predictions seem to us sufficiently different to warrant considering separately.

- We removed entries which appeared to be duplications of the same data, from different sources.

- We removed repeat predictions made by the same individual less than ten years apart.

- We removed some data which appeared to have been collected in a biased fashion, where we could not correct the bias.

- We removed some entries that did not seem to be predictions about general artificial intelligence.

- We may have removed some entries for other similar reasons.

- We added some predictions we knew of which were not in the data.

- We fixed some small typographic errors.

Deleted entries can be seen in the last sheet of our version of the dataset. Most have explanations in one of the last few columns.

We continue to change the dataset as we find predictions it is missing, or errors in it. The current dataset may not exactly match the descriptions on this page.

How did our changes matter?

Implications of the above changes:

- The dataset originally had 95 predictions; our version has 65 at last count.

- Armstrong and Sotala transformed each statement into a ‘median’ prediction. In the original dataset, the mean ‘median’ was 2040 and the median ‘median’ 2030. After our changes, the mean ‘median’ is 2046 and the median ‘median’ remains at 2030. The means are highly influenced by extreme outliers.

- We have not evaluated Armstrong and Sotala’s findings in the updated dataset. One reason is that their findings are mostly qualitative. For instance, it is a matter of judgment whether there is still ‘a visible difference’ between expert and non-expert performance. Our judgment may differ from that of the authors anyway, so it would be unclear whether the change in data changed their findings. We address some of the same questions by different methods.

minPY and maxIY predictions

People say many slightly different things about when human-level AI will arrive. We interpreted predictions into a common format: one or both of a claim about when human-level AI would be less likely than not, and a claim about when human-level AI would be more likely than not. Most people didn’t explicitly use such language, so we interpreted things roughly, as closely as we could. For instance, if someone said ‘AI will not be here by 2080’ we would interpret this as AI being less likely to exist than not by that date.

Throughout this page, we use ‘minimum probable year’ (minPY) to refer to the earliest time at which a person is interpreted as stating that AI is more likely than not. We use ‘maximum improbable year’ (maxIY) to refer to the latest time at which a person is interpreted as stating that AI is less likely than not. To be clear, these are not necessarily the earliest and latest times at which a person holds the requisite belief, just the earliest and latest times that are implied by their statement. For instance, if a person says ‘I disagree that we will have human-level AI in 2050’, then we interpret this as a maxIY prediction of 2050, though they may well also believe AI is less likely than not in 2065. We would not interpret this statement as implying any minPY. We interpreted predictions like ‘AI will arrive in about 2045’ as 2045 being the date at which AI would become more likely than not, so as both a minPY and a maxIY of 2045.
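The interpretation rules above can be sketched as a small data structure. This is an illustration only: the `Interpretation` class and the helper names `not_by`, `by` and `around` are hypothetical, not part of the dataset, and the actual coding of statements was done by hand and involved judgment.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Interpretation:
    """Hypothetical record of how one public statement is read."""
    min_py: Optional[int] = None  # earliest year AI is implied to be more likely than not
    max_iy: Optional[int] = None  # latest year AI is implied to be less likely than not


def not_by(year: int) -> Interpretation:
    # e.g. 'AI will not be here by 2080': only a maxIY is implied
    return Interpretation(max_iy=year)


def by(year: int) -> Interpretation:
    # e.g. 'AI will obviously exist before 3000AD': only a minPY is implied
    return Interpretation(min_py=year)


def around(year: int) -> Interpretation:
    # e.g. 'AI will arrive in about 2045': the same year is both minPY and maxIY
    return Interpretation(min_py=year, max_iy=year)


# The example statements quoted in the text
disagree_2050 = not_by(2050)
about_2045 = around(2045)
before_3000 = by(3000)
```

Leaving a field as `None` rather than guessing a value reflects the point made above: a statement such as ‘I disagree that we will have human-level AI in 2050’ implies no minPY at all.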

This is different from the ‘median’ interpretation Armstrong and Sotala provided, though that is not necessarily to disagree with their measure: as Armstrong points out, it is useful to have independent interpretations of the predictions. Both our measure and theirs could mislead in different circumstances. People who say ‘AI will come in about 100 years’ and ‘AI will come within about 100 years’ probably don’t mean to point to estimates 50 years apart (as they might be seen to in Armstrong and Sotala’s measure). On the other hand, if a person says ‘AI will obviously exist before 3000AD’ we will record it as ‘AI is more likely than not from 3000AD’, and it may be easy to forget that in context this was far from the earliest date at which they thought AI was more likely than not.

| | Original A&S ‘median’ | Updated A&S ‘median’ | minPY | maxIY |

| Mean | 2040 | 2046 | 2067 | 2067 |

| Median | 2030 | 2030 | 2037 | 2033 |

Table 1: Summary of mean and median AI predictions under different interpretations

As shown in Table 1, our median dates are a few years later than Armstrong & Sotala’s original or updated dates, and only four years from one another.

Categories used in our analysis

Timing

‘Early’ throughout refers to before 2000. ‘Late’ refers to 2000 onwards. We split the predictions in this way because often we are interested in recent predictions, and 2000 is a relatively natural recent cutoff. We chose this date without conscious attention to the data beyond the fact that there have been plenty of predictions since 2000.

Expertise

We categorized people as ‘AGI’, ‘AI’, ‘futurist’ and ‘other’ as best we could, according to their apparent research areas and activities. These are ambiguous categories, but the ends to which we put such categorization do not require that they be very precise.

Findings

Basic statistics

The median minPY is 2037 and median maxIY is 2033 (see ‘Basic statistics’ sheet). The mean minPY is 2067, which is the same as the mean maxIY (see ‘Basic statistics’ sheet). These means are fairly meaningless, as they are influenced greatly by a few extreme outliers. Figure 1 shows the distribution of most of the predictions.
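To see why a few extreme outliers make the mean uninformative while leaving the median stable, consider the following minimal sketch. The years in `predictions` are made up for illustration and are not taken from the dataset.

```python
from statistics import mean, median

# Hypothetical minPY values: four clustered predictions and one extreme outlier
predictions = [2025, 2030, 2035, 2040, 2300]

mean_year = mean(predictions)      # pulled far upward by the single outlier
median_year = median(predictions)  # unaffected by how extreme the outlier is

print(mean_year, median_year)
```

Here the single late prediction moves the mean decades past every other value, while the median stays in the middle of the cluster.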

The following figures show the fraction of predictors over time who claimed that human-level AI is more likely to have arrived by that time than not (i.e. minPY predictions). The first is for all predictions, and the second for predictions since 2000. The first graph is hard to meaningfully interpret, because the predictions were made in very different volumes at very different times. For instance, the small bump on the left is from a small number of early predictions. However, it gives a rough picture of the data.

Remember that these are dates from which people claimed something like AI being more likely than not. Such dates are influenced not only by what people believe, but also by what they are asked. If a person believes that AI is more likely than not by 2020, and they are asked ‘will there be AI in 2060’ they will respond ‘yes’ and this will be recorded as a prediction of AI being more likely than not from 2060. The graph is thus an upper bound for when people predict AI is more likely than not. That is, the graph of when people really predict AI with 50 percent confidence lies somewhere to the left of the one in figures 2 and 3.
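The upper-bound argument can be illustrated with a short sketch. Both lists below are hypothetical: `believed` stands for the years people actually hold as their 50 percent date, and `recorded` for the minPY values extracted from their statements, where the first person was only asked about 2060.

```python
def fraction_by(min_pys, year):
    """Fraction of predictors whose minPY is at or before `year`."""
    return sum(1 for y in min_pys if y <= year) / len(min_pys)


# Hypothetical data, not from the dataset
believed = [2020, 2035, 2050, 2070]
recorded = [2060, 2035, 2050, 2070]  # a recorded minPY can only be later, never earlier

years = range(2010, 2081, 10)
believed_curve = [fraction_by(believed, y) for y in years]
recorded_curve = [fraction_by(recorded, y) for y in years]

# The recorded curve never rises above the believed curve
recorded_never_higher = all(r <= b for r, b in zip(recorded_curve, believed_curve))
```

Because each recorded year is at or after the believed year, the recorded curve reaches any given fraction no earlier than the believed curve does, which is the sense in which it sits to the right.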

Similarity of predictions over time

In general, early and late predictions are distributed fairly similarly over the years following them. For minPY predictions, the correlation between the date of a prediction and the number of years until AI is predicted from that time is 0.13 (see ‘Basic statistics’ sheet). Figure 5 shows the cumulative probability of AI being predicted over time, by late and early predictors. At a glance, they are surprisingly similar. The largest difference between the fraction of early and of late people who predict AI by any given distance in the future is about 15% (see ‘Predictions over time 2’ sheet). A difference this large is fairly likely by chance. However, most of the predictions were made within twenty years of one another, so it is not surprising if they are similar.
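The ‘largest difference’ statistic described above is the maximum vertical gap between two empirical cumulative distributions. A minimal sketch of that calculation follows, assuming each group is represented as a list of years-until-AI; the `early` and `late` values are hypothetical, and the spreadsheet may compute the figure differently.

```python
def ecdf(sample, x):
    """Fraction of values in `sample` that are at or below x."""
    return sum(1 for v in sample if v <= x) / len(sample)


def largest_gap(a, b):
    """Largest absolute difference between the two empirical CDFs."""
    # The gap can only change at observed values, so checking those is enough
    points = sorted(set(a) | set(b))
    return max(abs(ecdf(a, x) - ecdf(b, x)) for x in points)


# Hypothetical years-until-AI for early and late predictors
early = [10, 20, 30, 40]
late = [10, 25, 30, 50]

gap = largest_gap(early, late)
print(f"{gap:.0%}")
```

With small groups, gaps of this kind arise easily from sampling noise, which is why a difference of about 15% is not strong evidence that the two groups differ.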

The six very early predictions do seem to be unusually optimistic. All six predict AI sooner than the median of 30 years, which would have a 1.6% probability of occurring by chance (0.5 to the sixth power).
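The 1.6% figure can be checked directly, under the assumption that each prediction independently has a one-half chance of falling below the median:

```python
# Assumption: each of the six predictions is independently below the
# median with probability 1/2
n_early = 6
p_all_below = 0.5 ** n_early

print(f"{p_all_below:.1%}")
```

This is a one-sided figure: it is the chance that all six fall below the median, not the chance that all six fall on the same side of it, which would be twice as large.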

Figures 4-7 illustrate the same data in different formats.

Groups of participants

Associations with expertise and enthusiasm

= Summary =

AGI people in this dataset are generally substantially more optimistic than AI people. Among the small number of futurists and others, futurists were optimistic about timing, and others were pessimistic.

= Details =

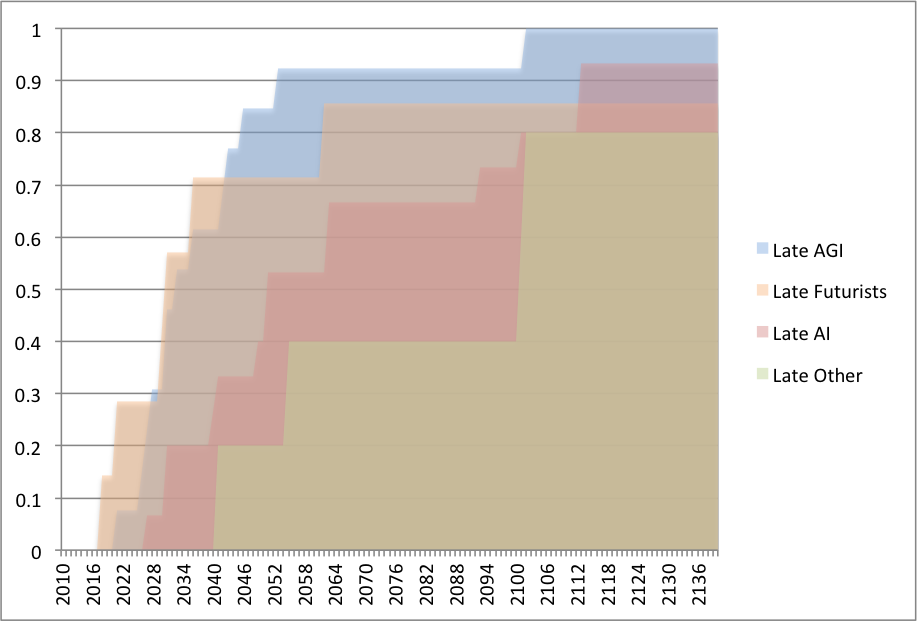

We classified the predictors as AGI researchers, (other) AI researchers, Futurists and Other, and calculated CDFs of their minPY predictions, both for early and late predictors. The figures below show a selection of these. Recall that ‘early’ and ‘late’ correspond to before and after 2000.

As we can see in figure 8, late AGI predictors are substantially more optimistic than late AI predictors: for almost any date this century, at least 20% more AGI people predict AI by then. The median late AI researcher minPY is 18 years later than the median late AGI researcher minPY. We haven’t checked whether this is partly caused by predictions by AGI researchers having been made earlier.

There were only 6 late futurists, and 6 late ‘other’ (compared to 13 and 16 late AGI and late AI respectively), so the data for these groups is fairly noisy. Roughly, late futurists in the sample were more optimistic than anyone, while late ‘other’ were more pessimistic than anyone.

There were no early AGI people, and only three early ‘other’. Among seven early AI and eight early futurists, the AI people predicted AI much earlier (70% of early AI people predict AI before any early futurists do), but this seems to be at least partly explained by the early AI people being concentrated very early, and people predicting AI similar distances in the future throughout time.

| Median minPY predictions | AGI | AI | Futurist | Other | All |

| Early (warning: noisy) | – | 1988 | 2031 | 2036 | 2024 |

| Late | 2033 | 2051 | 2030 | 2101 | 2042 |

Table 2: Median minPY predictions for all groups, late and early. There were no early AGI predictors.
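A table like Table 2 can be produced by grouping predictions by period and expertise and taking the median of each group. The sketch below assumes each record is a tuple of (year the prediction was made, expertise category, minPY); the records shown are hypothetical and do not reproduce the dataset.

```python
from collections import defaultdict
from statistics import median


def period(year_made):
    # 'Early' is before 2000; 'late' is 2000 onwards
    return "early" if year_made < 2000 else "late"


def median_min_py_by_group(records):
    """Map (period, expertise) to the median minPY of that group."""
    groups = defaultdict(list)
    for year_made, expertise, min_py in records:
        groups[(period(year_made), expertise)].append(min_py)
    return {key: median(values) for key, values in groups.items()}


# Hypothetical records: (year made, expertise, minPY)
records = [
    (1965, "AI", 1985),
    (1999, "AI", 2030),
    (2000, "AGI", 2030),
    (2008, "AGI", 2040),
    (2011, "AGI", 2035),
    (2012, "futurist", 2045),
]

medians = median_min_py_by_group(records)
```

Groups with no members, such as early AGI predictors in the real data, simply do not appear in the result.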

Statement makers and survey takers

= Summary =

Surveys seem to produce later median estimates than similar individuals making public statements do. We compared some of the surveys we know of to the demographically similar predictors in the MIRI dataset. We expected these to differ because predictors in the MIRI dataset are mostly choosing to make public statements, while survey takers are being asked, relatively anonymously, for their opinions. Surveys seem to produce median dates on the order of a decade later than statements made by similar groups.

= Details =

We expect surveys and voluntary statements to be subject to different selection biases. In particular, we expect surveys to represent a more even sample of opinion, and voluntary statements to be more strongly concentrated among people with exciting things to say or strong agendas. To learn about the difference between these groups, and thus the extent of any such bias, we compare below the median predictions made in surveys to the median predictions made by people from similar groups in voluntary statements.

Note that this is rough: categorizing people is hard, and we have not investigated the participants in these surveys more than cursorily. There are very few ‘other’ predictors in the MIRI dataset. The results in this section are intended to provide a ballpark estimate only.

Also note that while both sets of predictions are minPYs, the survey dates are often the actual median year that a person expects AI, whereas the statements could often be later years which the person happens to be talking about.

| Survey | Primary participants | Median minPY prediction in comparable statements in the MIRI data | Median in survey | Difference |

| Kruel (AI researchers) | AI | 2051 | 2062 | +11 |

| Kruel (AGI researchers) | AGI | 2033 | 2031 | -2 |

| AGI-09 | AGI | 2033 | 2040 | +7 |

| FHI | AGI/other | 2033-2062 | 2050 | in range |

| Klein | Other/futurist | 2030-2062 | 2050 | in range |

| AI@50 | AI/Other | 2051-2062 | 2056 | in range |

| Bainbridge | Other | 2062 | 2085 | +23 |

Table 3: median predictions in surveys and statements from demographically similar groups.

Note that the Kruel interviews are somewhere between statements and surveys, and are included in both sets of data.

It appears that the surveys give somewhat later dates than similar groups of people making statements voluntarily. Around half of the surveys give later answers than expected, and the other half are roughly as expected. The difference seems to be on the order of a decade. This is what one might naively expect in the presence of a bias from people advertising their more surprising views.
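The ‘Difference’ column of Table 3 follows a simple rule, sketched below: when the comparable statements give a single median, report the survey median minus that value; when they give a range, report ‘in range’ if the survey median falls inside it. The function name `compare` is hypothetical, and the handling of a survey median outside a range is our assumption, since no such case occurs in the table.

```python
def compare(statement_low, statement_high, survey_median):
    """Return the Table 3 'Difference' entry for one survey."""
    if statement_low <= survey_median <= statement_high:
        if statement_low == statement_high:
            return "+0"
        return "in range"
    # Assumption: outside a range, measure from the nearest end of the range
    nearest = statement_high if survey_median > statement_high else statement_low
    return f"{survey_median - nearest:+d}"


# Rows from Table 3: (survey, low, high, survey median)
rows = [
    ("Kruel (AI researchers)", 2051, 2051, 2062),
    ("Kruel (AGI researchers)", 2033, 2033, 2031),
    ("AGI-09", 2033, 2033, 2040),
    ("FHI", 2033, 2062, 2050),
    ("Klein", 2030, 2062, 2050),
    ("AI@50", 2051, 2062, 2056),
    ("Bainbridge", 2062, 2062, 2085),
]

differences = {name: compare(low, high, med) for name, low, high, med in rows}
```

Of the seven surveys, three give later medians than the comparable statements, three fall within the range, and one is slightly earlier.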

Relation of predictions and lifespan

Age and predicted time to AI are very weakly anti-correlated: r = -.017 (see Basic statistics sheet, “correlation of age and time to prediction”). This is evidence against a posited bias to predict AI within your existing lifespan, known as the Maes-Garreau Law.
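For reference, a correlation coefficient of this kind is a Pearson r between each predictor's age and the number of years until their predicted date. A minimal sketch of that calculation follows; the `ages` and `years_to_ai` lists are hypothetical and are not the dataset's values.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical predictors: age when predicting, and years until predicted AI
ages = [30, 45, 50, 62, 70]
years_to_ai = [25, 40, 15, 30, 35]

r = pearson_r(ages, years_to_ai)
```

If people tended to predict AI within their own remaining lifespan, older predictors would give shorter horizons and r would be clearly negative; a value near zero shows no such pattern.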