Capabilities of state-of-the-art AI, 2024

This is a list of some noteworthy capabilities of current state-of-the-art AI in various categories. Last updated 1/24/2024.

Games

- In 2017, AlphaZero learned to play Chess, Shogi, and Go better than the best human players after less than 8 hours of training each, starting with no domain knowledge beyond the rules of each game and no examples of human play.1)

- In 2020, MuZero also learned to play Chess, Shogi, and Go better than the best human players with no domain knowledge or examples of human play, and additionally without initially knowing the rules of the games.2)

- In 2022, machine learning was used to discover adversarial strategies that humans can use to defeat the best Go AI.3)

- In 2020, Agent57 learned to play all 57 Atari games above average human level.4)

- In 2021, EfficientZero, a variation of MuZero, learned to play 26 Atari games with a median score of 109% of human performance after only 2 hours of gameplay experience.5)

- In 2019, Pluribus won a game of Texas Hold’Em against 5 professional poker players. Pluribus was trained using self-play, starting with no human data or domain knowledge, and using the equivalent of $150 in compute.6)

- In 2019, OpenAI Five defeated a professional Dota 2 team twice.7)

- In 2019, OpenAI Five defeated 99.4% of Dota 2 players in public matches.8)

- In 2019, AlphaStar reached Grandmaster level in StarCraft II, playing with the same constraints as a human player (viewing the world through a camera, restricted click rate).9)

- DreamerV3 is a general algorithm from 2023 that can learn to play a variety of games without human data, and is able to collect diamonds in Minecraft.10)

- In 2022, DeepNash won 84% of Stratego games against the top expert human players on Gravon games.11)

- Cicero, from 2022, can play Diplomacy, a game that involves communicating and coordinating with other players. It ranked in the top 10% of players who had played more than one game on webDiplomacy.net.12)

Examples and discussion of Diplomacy gameplay with Cicero

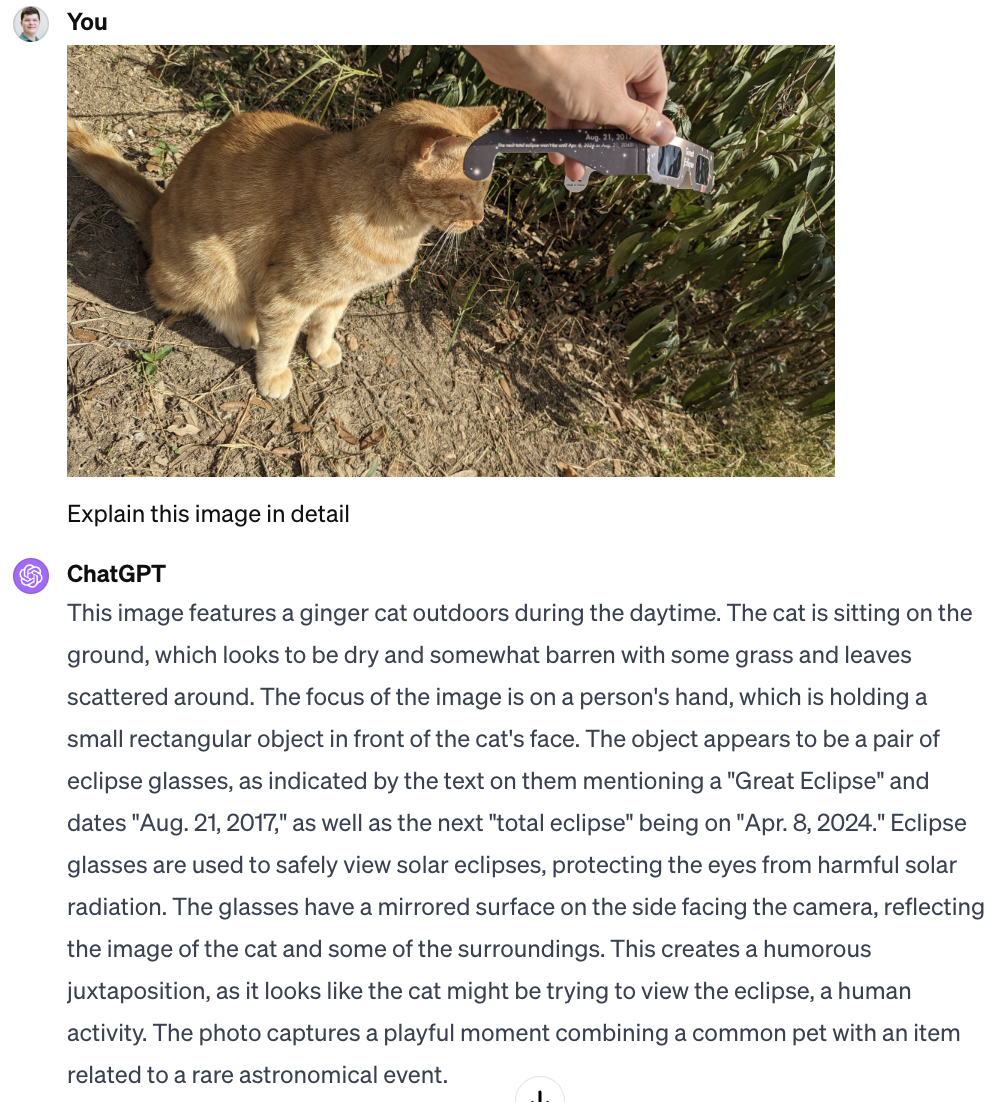

Language

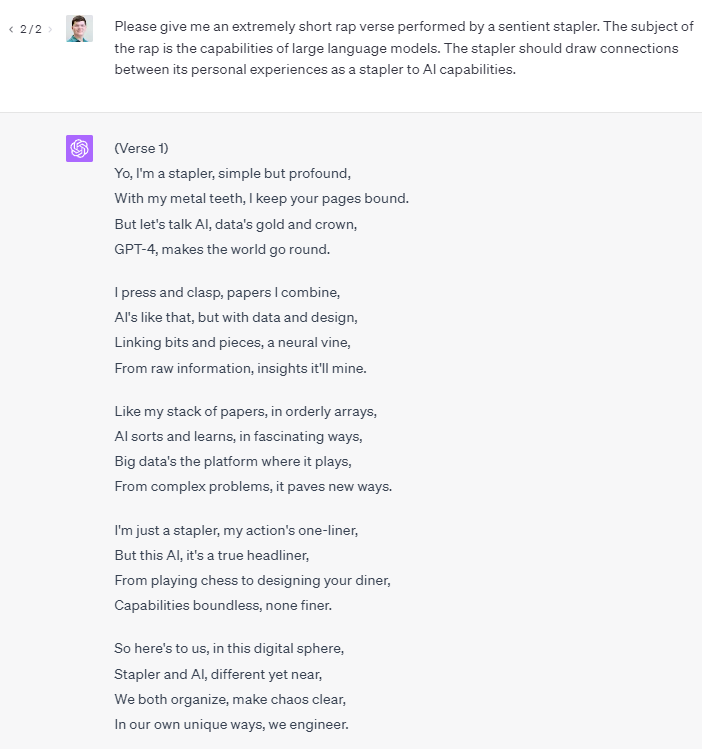

- GPT-4, a large language model from 2023, can write poetry, answer questions, reason about the world, have conversations, act out characters, and more.

- Large language models such as GPT-4 can also write code. GPT-4 correctly solved programming problems in the HumanEval dataset 67% of the time.

- GPT-4 achieved human-level performance on various professional and academic exams, including SATs, AP exams, and the Uniform Bar Exam.

- GPT-4 correctly answered 92% of the questions in GSM8K, a dataset of elementary school level math word problems.

- Unlike many earlier large language models, GPT-4 can accept both text and images as input.13)

- PaLM, a language model from 2022, surpassed average human performance on BIG-bench, “a collaborative benchmark aimed at producing challenging tasks for large language models.”14)

- As of 2020, Google Translate supported over 100 languages. When translating from other languages into English, its translations received BLEU scores ranging from around 0.15 to 0.53, depending on the language. A BLEU score measures how similar a translation is to one created by a human translator. It ranges from 0 to 1, where a score of 1 indicates output identical to the human reference translation.15)

- Elicit is an AI research assistant from 2022 that, given a research question, can find relevant papers and summarize the findings of the top four papers.16)

- In 2022, Ithaca restored the missing text in ancient Greek inscriptions with 62% accuracy.17)

- In 2022, an AI system passed the human baseline for performance in natural language inference, a task that involves determining whether a hypothesis is true, false, or undetermined based on presented premises.18)
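To make the BLEU description above more concrete, here is a minimal sketch of a simplified sentence-level BLEU score in Python. It assumes a single reference translation, whitespace tokenization, n-grams up to length 4, and no smoothing. The function names `ngrams` and `simple_bleu` are hypothetical and chosen for illustration. This is not the evaluation setup behind the Google Translate figures, which is not described on this page.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every contiguous n-gram in a list of tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=4):
    """Simplified BLEU: geometric mean of clipped n-gram precisions
    multiplied by a brevity penalty. Illustrative assumptions: one
    reference, whitespace tokens, no smoothing."""
    cand = candidate.split()
    ref = reference.split()
    if not cand:
        return 0.0

    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or overlap == 0:
            return 0.0  # without smoothing, any zero precision gives 0
        log_precisions.append(math.log(overlap / total))

    # Brevity penalty: penalize candidates shorter than the reference.
    if len(cand) > len(ref):
        bp = 1.0
    else:
        bp = math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


identical = simple_bleu("the cat sat on the mat", "the cat sat on the mat")
partial = simple_bleu("a b c d e", "a b c d f")
unrelated = simple_bleu("one two three four", "five six seven eight")
```

An identical translation scores 1, a translation sharing no words with the reference scores 0, and partial matches fall in between, which is the range described above.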

Images

- GPT-4 can recognize and interpret the content of images.19)

- GaussianFace is a face verification system from 2014 that surpassed human-level performance at verifying faces in the Labeled Faces in the Wild (LFW) dataset.20)

- LipNet is a lip-reading system from 2016 that outperformed experienced human lip readers at sentence-level lip-reading.21)

- AI systems created by Google have reached human-level performance in some tasks related to analyzing medical imaging.22)

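- CLIP, from 2021, can match an image with the text description that best fits it, which allows it to classify images into categories it was not explicitly trained on.23)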

- DALL-E 3, from 2023, can generate novel images in many styles, given a text description from the user. It can occasionally produce coherent text within images.24)

DALL-E 3 output with the prompt “A photo of a painted mural on the side of a building depicting an AI strategy researcher getting distracted from his work by extremely interesting artwork on his laptop screen. A thought bubble above his head says 'Wow, generative AI is quickly improving!'” For a subscription fee, DALL-E 3 is available to use at chat.openAI.com

- Muse can also generate images from text descriptions, more efficiently than some other models such as DALL-E 2.25)

- DeepFaceLab, from 2020, can swap a face in a video with another person’s face (“deepfakes”).26)

- Generative adversarial networks such as StyleGAN2, from 2019, can be trained to create realistic images of something within a certain category, such as human faces.27)

- An AI system from 2023 can convincingly copy someone's handwriting after seeing only a few paragraphs of example text.28)

- AI systems such as VideoPoet, from 2023, can generate short videos given a text description.29)

A movie composed of several individual video clips produced by VideoPoet

Audio

- Many models can read text with a human-like voice. Tacotron 2, from 2017, generated voice samples that received a mean opinion score of 4.53/5 compared to 4.58/5 for professionally recorded human speech.30)

- Automatic speech recognition systems can transcribe recordings of human speech. Whisper, from 2022, is able to transcribe recordings with an accuracy close to that of professional human transcribers.31)

- AudioLM, from 2022, creates predicted “continuations” of an audio input.32)

- Models such as Deep Voice 3, from 2018, can imitate a human voice based on a few samples of recorded speech.33)

- Recent models such as Koe can take a recorded voice sample and change it into another voice.34)

- Suno.ai, from 2023, can create songs with lyrics and instrumentation based on a text description of the song's style and subject.35)

Output from Suno.AI, given the prompt “A soulful R&B song that is self-referentially about how the song is an example of AI-generated audio output on a wiki page about the capabilities of state-of-the-art AI systems”

Robotics

- Although they are prone to occasional mistakes, self-driving cars are able to drive with human supervision.36)

- In 2022, an AI-piloted drone won multiple races against three world-champion human drone pilots.37)

- A robot made by OpenAI in 2019 can solve a Rubik’s Cube with one human-like hand.38)

- In 2022, a robot successfully performed laparoscopic surgery on four pigs, without human assistance.39)

- Atlas, a humanoid robot, can walk, run, and perform parkour moves such as backflips.40)

A demo of the robot Atlas performing parkour.

Biology

- Given a sequence of amino acids, AlphaFold 2, first demonstrated in 2020, can predict the 3D structure of the protein that they form.41) In CASP14 (Critical Assessment of Structure Prediction), AlphaFold 2’s predicted structures had a “median backbone accuracy of 0.96 Å r.m.s.d.95 (Cα root-mean-square deviation at 95% residue coverage) (95% confidence interval = 0.85–1.16 Å).”42)

- In 2022, a model was able to predict the effect of a molecule on levels of an enzyme in humans and find molecules that inhibit a particular enzyme.43)

- MinD-Vis, from 2022, can decode a subject’s brain activity to reconstruct an image that has some of the details and features of the image the subject is looking at.44)
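The AlphaFold 2 accuracy figure above is a root-mean-square deviation (r.m.s.d.) between predicted and experimentally determined atom positions. As a rough illustration, the sketch below computes a plain r.m.s.d. in Python. It assumes the two structures are already superimposed and that each list holds one (x, y, z) coordinate in ångströms per Cα atom. It does not perform the structural alignment or the 95% residue coverage selection used for the quoted r.m.s.d.95 metric. The function name `rmsd` and the example coordinates are hypothetical.

```python
import math


def rmsd(predicted, actual):
    """Root-mean-square deviation between two equal-length lists of
    (x, y, z) coordinates. Assumes the structures are already aligned."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("coordinate lists must be non-empty and equal in length")
    squared_error = sum(
        (p - a) ** 2
        for point_p, point_a in zip(predicted, actual)
        for p, a in zip(point_p, point_a)
    )
    return math.sqrt(squared_error / len(predicted))


# Made-up example: every predicted atom is displaced by 1 Å along z.
predicted_ca = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
actual_ca = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0)]
example_rmsd = rmsd(predicted_ca, actual_ca)
```

A lower value means the predicted atom positions sit closer to the measured ones. For scale, the quoted 0.96 Å is less than the length of a typical covalent bond.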

Mathematics

- In 2022, AlphaTensor discovered efficient new algorithms for matrix multiplication, including an algorithm that broke a 50-year record in efficiency for 4×4 matrices in a finite field.45)

- In 2024, AlphaGeometry solved 25 out of 30 Olympiad-level geometry problems, approaching the level of an Olympiad gold medalist.46)
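For context on what an "efficient" matrix multiplication algorithm means in the AlphaTensor entry above: such algorithms reduce the number of scalar multiplications needed. The sketch below shows Strassen's algorithm from 1969, which multiplies two 2×2 matrices with 7 multiplications instead of the usual 8. It is background only, not AlphaTensor's algorithm. Applied recursively, Strassen's method needs 49 multiplications for 4×4 matrices, and AlphaTensor found a method using 47 in modulo-2 arithmetic. The function name `strassen_2x2` is hypothetical.

```python
def strassen_2x2(a, b):
    """Multiply two 2x2 matrices (nested lists) using Strassen's
    7 scalar multiplications instead of the schoolbook 8."""
    (a11, a12), (a21, a22) = a
    (b11, b12), (b21, b22) = b

    # The seven products.
    m1 = (a11 + a22) * (b11 + b22)
    m2 = (a21 + a22) * b11
    m3 = a11 * (b12 - b22)
    m4 = a22 * (b21 - b11)
    m5 = (a11 + a12) * b22
    m6 = (a21 - a11) * (b11 + b12)
    m7 = (a12 - a22) * (b21 + b22)

    # Recombine the products using only additions and subtractions.
    return [
        [m1 + m4 - m5 + m7, m3 + m5],
        [m2 + m4, m1 - m2 + m3 + m6],
    ]


product = strassen_2x2([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

The saving looks small for one 2×2 product, but it compounds when the same trick is applied recursively to blocks of large matrices.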

1)

Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., Lanctot, M., Sifre, L., Kumaran, D., Graepel, T., Lillicrap, T., Simonyan, K., & Hassabis, D. (2017). Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm. arXiv. https://doi.org/10.48550/arXiv.1712.01815

2)

Schrittwieser, J., Antonoglou, I., Hubert, T., Simonyan, K., Sifre, L., Schmitt, S., Guez, A., Lockhart, E., Hassabis, D., Graepel, T., Lillicrap, T., & Silver, D. (2020). Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model. Nature, 588, 604–609. https://doi.org/10.1038/s41586-020-03051-4

3)

Wang, T. T., Gleave, A., Belrose, N., Tseng, T., Miller, J., Dennis, M. D., Duan, Y., Pogrebniak, V., Levine, S., & Russell, S. (2022). Adversarial Policies Beat Professional-Level Go AIs. arXiv. https://doi.org/10.48550/arXiv.2211.00241

4)

Badia, A. P., Piot, B., Kapturowski, S., Sprechmann, P., Vitvitskyi, A., Guo, D., & Blundell, C. (2020). Agent57: Outperforming the Atari Human Benchmark. arXiv. https://doi.org/10.48550/arXiv.2003.13350

5)

Ye, W., Liu, S., Kurutach, T., Abbeel, P., & Gao, Y. (2021). Mastering Atari Games with Limited Data. arXiv. https://doi.org/10.48550/arXiv.2111.00210

6)

Facebook, Carnegie Mellon build first AI that beats pros in 6-player poker. Meta AI. (2019, July 11). Retrieved November 22, 2022, from https://ai.facebook.com/blog/pluribus-first-ai-to-beat-pros-in-6-player-poker

7)

Wiggers, K. (2019, April 13). OpenAI Five defeats professional Dota 2 team, twice. VentureBeat. Retrieved November 22, 2022, from https://venturebeat.com/ai/openai-five-defeats-a-team-of-professional-dota-2-players

8)

Wiggers, K. (2019, April 22). OpenAI's Dota 2 bot defeated 99.4% of players in public matches. VentureBeat. Retrieved November 22, 2022, from https://venturebeat.com/ai/openais-dota-2-bot-defeated-99-4-of-players-in-public-matches

9)

AlphaStar: Grandmaster level in StarCraft II using multi-agent reinforcement learning. DeepMind. (2019, October 30). Retrieved November 22, 2022, from https://www.deepmind.com/blog/alphastar-grandmaster-level-in-starcraft-ii-using-multi-agent-reinforcement-learning

10)

Hafner, D., Pasukonis, J., Ba, J., & Lillicrap, T. (2023). Mastering Diverse Domains through World Models. arXiv. https://doi.org/10.48550/arXiv.2301.04104

11)

Mastering Stratego, the Classic Game of Imperfect Information. DeepMind blog. (2022, December 1). Retrieved December 2, 2022, from https://www.deepmind.com/blog/mastering-stratego-the-classic-game-of-imperfect-information

12)

Cicero. Meta AI. (n.d.). Retrieved November 23, 2022, from https://ai.facebook.com/research/cicero

13), 19)

GPT-4. (2023, March 14). OpenAI. Retrieved May 26, 2023, from https://openai.com/research/gpt-4

14)

Chowdhery, A., Narang, S., Devlin, J., Bosma, M., Mishra, G., Roberts, A., Barham, P., Chung, H. W., Sutton, C., Gehrmann, S., Schuh, P., Shi, K., Tsvyashchenko, S., Maynez, J., Rao, A., Barnes, P., Tay, Y., Shazeer, N., Prabhakaran, V., . . . Fiedel, N. (2022). PaLM: Scaling Language Modeling with Pathways. arXiv. https://doi.org/10.48550/arXiv.2204.02311

15)

Caswell, I., & Liang, B. (2020, June 8). Recent advances in Google Translate. Google AI Blog. Retrieved November 22, 2022, from https://ai.googleblog.com/2020/06/recent-advances-in-google-translate.html

16)

Elicit FAQ. Elicit.org. (2022, April). Retrieved November 22, 2022, from https://elicit.org/faq

17)

Assael, Y., Sommerschield, T., Shillingford, B., Bordbar, M., Pavlopoulos, J., Chatzipanagiotou, M., Androutsopoulos, I., Prag, J., & de Freitas, N. (2022). Restoring and attributing ancient texts using deep neural networks. Nature, 603(7900), 280–283. https://doi.org/10.1038/s41586-022-04448-z

18)

Nestor Maslej, Loredana Fattorini, Erik Brynjolfsson, John Etchemendy, Katrina Ligett, Terah Lyons, James Manyika, Helen Ngo, Juan Carlos Niebles, Vanessa Parli, Yoav Shoham, Russell Wald, Jack Clark, and Raymond Perrault, “The AI Index 2023 Annual Report,” AI Index Steering Committee, Institute for Human-Centered AI, Stanford University, Stanford, CA, April 2023.

20)

Lu, C., & Tang, X. (2014). Surpassing Human-Level Face Verification Performance on LFW with GaussianFace. arXiv. https://doi.org/10.48550/arXiv.1404.3840

21)

Assael, Y. M., Shillingford, B., Whiteson, S., & de Freitas, N. (2016). LipNet: End-to-End Sentence-level Lipreading. arXiv. https://doi.org/10.48550/arXiv.1611.01599

22)

AI Imaging & Diagnostics. Google Health. (n.d.). Retrieved November 22, 2022, from https://health.google/health-research/imaging-and-diagnostics/

23)

Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J., Krueger, G., & Sutskever, I. (2021). Learning Transferable Visual Models From Natural Language Supervision. arXiv. https://doi.org/10.48550/arXiv.2103.00020

24)

Betker, James, et al. (2023, October). Improving Image Generation with Better Captions. OpenAI. Retrieved October 31, 2023, from https://cdn.openai.com/papers/dall-e-3.pdf

25)

Muse: Text-To-Image Generation via Masked Generative Transformers, https://muse-model.github.io/ . Accessed 9 January 2023.

26)

Perov, I., Gao, D., Chervoniy, N., Liu, K., Marangonda, S., Umé, C., Dpfks, M., Facenheim, C. S., RP, L., Jiang, J., Zhang, S., Wu, P., Zhou, B., & Zhang, W. (2020). DeepFaceLab: Integrated, flexible and extensible face-swapping framework. arXiv. https://doi.org/10.48550/arXiv.2005.05535

27)

Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J., & Aila, T. (2019). Analyzing and Improving the Image Quality of StyleGAN. arXiv. https://doi.org/10.48550/arXiv.1912.04958

28)

Transformers of the handwritten word. MBZUAI. (2023, December 25). https://mbzuai.ac.ae/news/transformers-of-the-handwritten-word/

29)

Kondratyuk, D., Yu, L., Gu, X., Lezama, J., Huang, J., Hornung, R., Adam, H., Akbari, H., Alon, Y., Birodkar, V., Cheng, Y., Chiu, M., Dillon, J., Essa, I., Gupta, A., Hahn, M., Hauth, A., Hendon, D., Martinez, A., . . . Jiang, L. (2023). VideoPoet: A Large Language Model for Zero-Shot Video Generation. arXiv. https://arxiv.org/abs/2312.14125

30)

Shen, J., & Pang, R. (2017, December 19). Tacotron 2: Generating human-like speech from text. Google AI Blog. Retrieved November 23, 2022, from https://ai.googleblog.com/2017/12/tacotron-2-generating-human-like-speech.html

31)

Radford, A., Kim, J. W., Xu, T., Brockman, G., McLeavey, C., & Sutskever, I. (2022). Robust Speech Recognition via Large-Scale Weak Supervision. Retrieved November 22, 2022, from https://cdn.openai.com/papers/whisper.pdf

32)

AudioLM. Retrieved February 27, 2023, from https://google-research.github.io/seanet/audiolm/examples/

33)

Arik, S. O., Chen, J., Peng, K., Ping, W., & Zhou, Y. (2018). Neural Voice Cloning with a Few Samples. arXiv. https://doi.org/10.48550/arXiv.1802.06006

34)

Koe: Recast. Koe AI. (n.d.). Retrieved November 22, 2022, from https://koe.ai/recast

35)

Suno.ai. Retrieved January 3, 2024, from https://www.suno.ai/

36)

Metz, C., Laffin, B., & Thi, H. D. (2022, November 15). What riding in a self-driving Tesla tells us about the future of autonomy. The New York Times. Retrieved November 22, 2022, from https://www.nytimes.com/interactive/2022/11/14/technology/tesla-self-driving-flaws.html

37)

Edwards, Benj. (2023, August 31). High-speed AI drone beats world-champion racers for the first time. Ars Technica. Retrieved October 31, 2023, from https://arstechnica.com/information-technology/2023/08/high-speed-ai-drone-beats-world-champion-racers-for-the-first-time/

38)

Akkaya, I., Andrychowicz, M., Chociej, M., Litwin, M., McGrew, B., Petron, A., Paino, A., Plappert, M., Powell, G., Ribas, R., Schneider, J., Tezak, N., Tworek, J., Welinder, P., Weng, L., Yuan, Q., Zaremba, W., & Zhang, L. (2019). Solving Rubik's Cube with a Robot Hand. arXiv. https://doi.org/10.48550/arXiv.1910.07113

39)

Gregory, A. (2022, January 26). Robot successfully performs keyhole surgery on pigs without human help. The Guardian. Retrieved November 22, 2022, from https://www.theguardian.com/technology/2022/jan/26/robot-successfully-performs-keyhole-surgery-on-pigs-without-human-help

40)

Atlas™. Boston Dynamics. (n.d.). Retrieved November 22, 2022, from https://www.bostondynamics.com/atlas

41)

Alphafold. DeepMind. (n.d.). Retrieved November 22, 2022, from https://www.deepmind.com/research/highlighted-research/alphafold

42)

Jumper, J., Evans, R., Pritzel, A., Green, T., Figurnov, M., Ronneberger, O., Tunyasuvunakool, K., Bates, R., Žídek, A., Potapenko, A., Bridgland, A., Meyer, C., Kohl, S. A., Ballard, A. J., Cowie, A., Romera-Paredes, B., Nikolov, S., Jain, R., Adler, J., … Hassabis, D. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873), 583–589. https://doi.org/10.1038/s41586-021-03819-2

43)

Urbina, F., Lentzos, F., Invernizzi, C., & Ekins, S. (2022). Dual use of artificial-intelligence-powered drug discovery. Nature Machine Intelligence, 4(3), 189–191. https://doi.org/10.1038/s42256-022-00465-9

44)

Seeing beyond the brain: Conditional diffusion model with sparse masked modeling for vision decoding. MinD-Vis. (n.d.). Retrieved November 22, 2022, from https://mind-vis.github.io

45)

Fawzi, A., Balog, M., Huang, A., Hubert, T., Romera-Paredes, B., Barekatain, M., Novikov, A., Ruiz, F. J. R., Schrittwieser, J., Swirszcz, G., Silver, D., Hassabis, D., & Kohli, P. (2022). Discovering faster matrix multiplication algorithms with reinforcement learning. Nature, 610(7930), 47–53. https://doi.org/10.1038/s41586-022-05172-4

46)

Trinh, T. H., Wu, Y., Le, Q. V., He, H., & Luong, T. (2024). Solving olympiad geometry without human demonstrations. Nature, 625(7995), 476-482. https://doi.org/10.1038/s41586-023-06747-5

uncategorized/capabilities_of_sota_ai.txt · Last modified: 2024/12/10 21:50 by harlanstewart