
Historic trends in light intensity

Published 07 February, 2020; last updated 23 April, 2020

Maximum light intensity of artificial light sources has discontinuously increased once that we know of: argon flashes represented roughly 1000 years of progress at past rates.

Annual growth in light intensity increased from an average of roughly 0.4% per year between 424BC and 1943 to an average of roughly 190% per year between 1943 and the end of our data in 2008.

Details

This case study is part of AI Impacts’ discontinuous progress investigation.

Background

Anything uncited on this page reflects our understanding, based on familiarity with the topic.[1]

Electromagnetic waves (also called electromagnetic radiation) are composed of oscillating electric and magnetic fields. Their wavelengths span from gamma rays, with wavelengths on the order of 10⁻²⁰ meters, to radio waves, with wavelengths on the order of kilometers. Wavelengths from roughly 380 to 750 nanometers are visible to the human eye and are usually referred to as light, though the entire spectrum is sometimes called light, especially in physics. These waves carry energy, and both their usefulness and their effect on matter depend strongly on their intensity: the amount of energy they deliver to a given area per unit time. Intensity is often measured in watts per square centimeter (W/cm²), and it can be increased either by increasing the power (energy per unit time, measured in watts) or by focusing the light onto a smaller area.
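As a rough illustration of the definition above, intensity is power divided by the area it falls on, so the same power concentrated onto a smaller spot gives a higher intensity. This is a minimal sketch assuming a uniformly illuminated circular spot; the 100 W power and the spot sizes are made-up example values, not figures from our data.

```python
import math

def intensity_w_per_cm2(power_w: float, spot_radius_cm: float) -> float:
    """Intensity (W/cm^2) of power_w spread uniformly over a circular spot."""
    area_cm2 = math.pi * spot_radius_cm ** 2
    return power_w / area_cm2

# Hypothetical example: the same 100 W delivered to two different spot sizes.
wide = intensity_w_per_cm2(100.0, 1.0)    # 1 cm radius spot
narrow = intensity_w_per_cm2(100.0, 0.1)  # 1 mm radius spot
print(f"{wide:.1f} W/cm^2 vs {narrow:.1f} W/cm^2")
```

Shrinking the spot radius tenfold raises the intensity a hundredfold, because area scales with the square of the radius.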

Electromagnetic radiation is given off by all matter as thermal radiation, with the power and wavelength of the waves determined by the temperature and material properties of the matter. When the matter is hot enough to emit visible light, as is the case with the tungsten filament in a light bulb or the sun, the process is referred to as incandescence. Processes which produce light by other means are commonly referred to as luminescence. Common sources of luminescence are LEDs and fireflies.

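The power emitted per unit area by an incandescent light source is approximately given by the Stefan–Boltzmann law, which states that for an ideal blackbody it is proportional to the fourth power of the temperature.[2]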
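For an ideal blackbody, the Stefan–Boltzmann law gives the power radiated per unit surface area as j* = σT⁴. The sketch below evaluates it for temperatures mentioned on this page: 3,370 K for burning magnesium, ~25,000 K for the argon flash, and about 5,772 K for the sun's surface (an assumed standard value, not taken from our data). Real sources are not perfect blackbodies, so these are idealized thermal-emission figures rather than measured intensities.

```python
# Stefan–Boltzmann constant in W m^-2 K^-4 (CODATA value).
SIGMA = 5.670374419e-8

def blackbody_exitance_w_per_cm2(temperature_k: float) -> float:
    """Power radiated per unit area by an ideal blackbody, in W/cm^2."""
    w_per_m2 = SIGMA * temperature_k ** 4
    return w_per_m2 / 1e4  # 1 m^2 = 10^4 cm^2

sources = {
    "burning magnesium": 3370.0,
    "solar surface (assumed 5772 K)": 5772.0,
    "argon flash": 25000.0,
}
for name, t in sources.items():
    print(f"{name:32s} {blackbody_exitance_w_per_cm2(t):12.3e} W/cm^2")
```

Because emission scales as T⁴, the roughly sevenfold temperature ratio between the argon flash and burning magnesium corresponds to a factor of about 3,000 in power emitted per unit area.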

Light intensity is relevant to applications such as starting fires with lenses, cutting with lasers, plasma physics, spectroscopy, and high-speed photography.

History of progress

Focused sunlight and magnesium

For much of history, our only practical sources of light have been the sun and burning various materials. In both cases the light is incandescent (produced by a substance being hot), so light intensity depends on the temperature of the hot substance. It is difficult to make anything as hot as the sun, and therefore difficult to make a light source as bright as sunlight, especially very well focused sunlight. We do not know how close the best focused sunlight historically came to the practical limit, but focused sunlight was our most intense source of light for most of human history.

There is evidence that people have used focused sunlight to start fires for a very long time.[3] There is further evidence that more advanced lens technology has existed for over 1000 years,[4] so humans have been able to focus sunlight to near the theoretical limit[5] for a very long time. Nonetheless, it appears that nobody fully understood how lenses worked until the 17th century, and classical optics continued to advance well into the 19th and 20th centuries, so it seems likely that there were marginal improvements to be made in more recent times. In sum, we were probably slowly approaching an intensity limit for focused sunlight for a very long time. There is no particular reason to think that there were any sudden jumps in progress during this period, but we have not investigated this.
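The theoretical limit on focusing sunlight can be sketched with the standard étendue argument: an ideal concentrator in air can raise irradiance by at most 1/sin²θ, where θ is the sun's angular radius as seen from Earth. This sketch assumes textbook values for the solar radius, the Earth–sun distance, the solar constant above the atmosphere, and the sun's effective temperature; it ignores atmospheric losses and lens imperfections, which are among the reasons practical systems fall short of the limit.

```python
# Assumed textbook values (not from our dataset).
SOLAR_RADIUS_M = 6.957e8          # nominal solar radius
EARTH_SUN_DISTANCE_M = 1.496e11   # 1 astronomical unit
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # irradiance above the atmosphere
SIGMA = 5.670374419e-8            # Stefan–Boltzmann constant, W m^-2 K^-4
SUN_TEMPERATURE_K = 5772.0        # effective temperature of the solar surface

# Sine of the sun's angular radius as seen from Earth.
sin_theta = SOLAR_RADIUS_M / EARTH_SUN_DISTANCE_M
# Maximum concentration ratio for an ideal concentrator in air (refractive index 1).
max_concentration = 1.0 / sin_theta ** 2
max_focused = SOLAR_CONSTANT_W_PER_M2 * max_concentration
solar_surface = SIGMA * SUN_TEMPERATURE_K ** 4

print(f"max concentration ~ {max_concentration:,.0f}x")
print(f"max focused       ~ {max_focused:.3e} W/m^2")
print(f"solar surface     ~ {solar_surface:.3e} W/m^2")
```

The last two numbers agree to within a fraction of a percent: ideal focusing can at best reproduce the intensity at the sun's surface, never exceed it.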

Magnesium is the first combustible material we found that we are confident burns substantially brighter than crudely focused sunlight, and for which we have an estimated date of first availability. It was first isolated in 1808,[6] and burns at a temperature of about 3370 K.[7] Magnesium was bright enough, and had a broad enough spectrum, to be useful for early photography.

Mercury Arc Lamp

The first arc lamp was invented as part of the same series of experiments that isolated magnesium. Arc lamps generate light by using an electrical current to create a plasma,[8] which emits light through a combination of luminescence and incandescence. Although they seem to have been the first intense artificial light sources that do not rely on a high combustion temperature,[9] they do not seem to have been brighter than a magnesium flame[10] in the early stages of their development. Nonetheless, by the mid-1930s, mercury arc lamps operated in glass tubes filled with particular gases were the brightest sources available that we found. Our impression is that progress was incremental between their first demonstration around 1800 and their use as high-intensity sources in the 1930s, but we have not investigated this thoroughly.

Argon Flashes

Argon flashes were invented during the Manhattan Project[11] to enable the high-speed photography needed to understand plutonium implosions. They are created by surrounding a high explosive with argon gas. The shock from the explosive ionizes the argon, which then gives off a lot of UV light as it recombines. The UV light is absorbed by the argon, and because argon has a low heat capacity (that is, it takes very little energy to become hot), it becomes extremely hot, emitting blackbody radiation at roughly 25,000 K. This was a large improvement in the intensity of light from blackbody radiation, and there does not seem to have been much improvement in blackbody sources in the 60 years since.
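Wien's displacement law, λ_max = b/T, gives the wavelength at which an ideal blackbody's emission peaks. Applying it to the ~25,000 K figure above, and for comparison to an assumed 5,772 K solar surface, suggests why the argon flash is described as emitting strongly in the ultraviolet. This is an idealized blackbody sketch, not a model of the actual argon spectrum.

```python
# Wien's displacement constant in m·K (CODATA value).
WIEN_B = 2.897771955e-3

def peak_wavelength_nm(temperature_k: float) -> float:
    """Wavelength (nm) at which an ideal blackbody's spectral radiance peaks."""
    return WIEN_B / temperature_k * 1e9

for name, t in [("solar surface (assumed)", 5772.0), ("argon flash", 25000.0)]:
    print(f"{name:24s} peak ~ {peak_wavelength_nm(t):6.1f} nm")
```

The visible band begins at roughly 400 nm, so a peak near 116 nm lies well into the ultraviolet, while the sun's peak falls in the visible.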

Lasers

Lasers work by storing energy in a material by promoting electrons into higher energy states, so that the energy can then be used to amplify light that passes through the material. Because lasers can amplify light in a very controlled way, they can be used to make extremely short, high-energy pulses of light, which can be focused onto a very small area. Because lasers are not subject to the same thermodynamic limits as blackbody sources, they can achieve much higher intensities: current state-of-the-art lasers create light 16 orders of magnitude more intense than the light from an argon flash.
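The combination of short duration and small focal spot is what drives laser intensity up. A minimal sketch, using hypothetical pulse parameters rather than any specific laser from our data: peak intensity is roughly pulse energy divided by pulse duration and focal-spot area. This flat-top approximation ignores the real temporal and spatial pulse shapes, which change the result by factors of order one.

```python
import math

def approx_peak_intensity_w_per_cm2(energy_j: float, duration_s: float,
                                    spot_radius_cm: float) -> float:
    """Crude peak intensity: energy / (duration * area), assuming a flat-top pulse."""
    peak_power_w = energy_j / duration_s
    area_cm2 = math.pi * spot_radius_cm ** 2
    return peak_power_w / area_cm2

# Hypothetical pulse: 1 J in 30 femtoseconds, focused to a 1 micrometre radius.
i_peak = approx_peak_intensity_w_per_cm2(1.0, 30e-15, 1e-4)
print(f"~{i_peak:.1e} W/cm^2 (10^{math.log10(i_peak):.1f})")
```

Even a modest 1 J pulse, compressed into femtoseconds and a micrometre-scale spot, reaches around 10²¹ W/cm², far above the blackbody figures for any thermal source discussed here.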

Trends

Light intensity

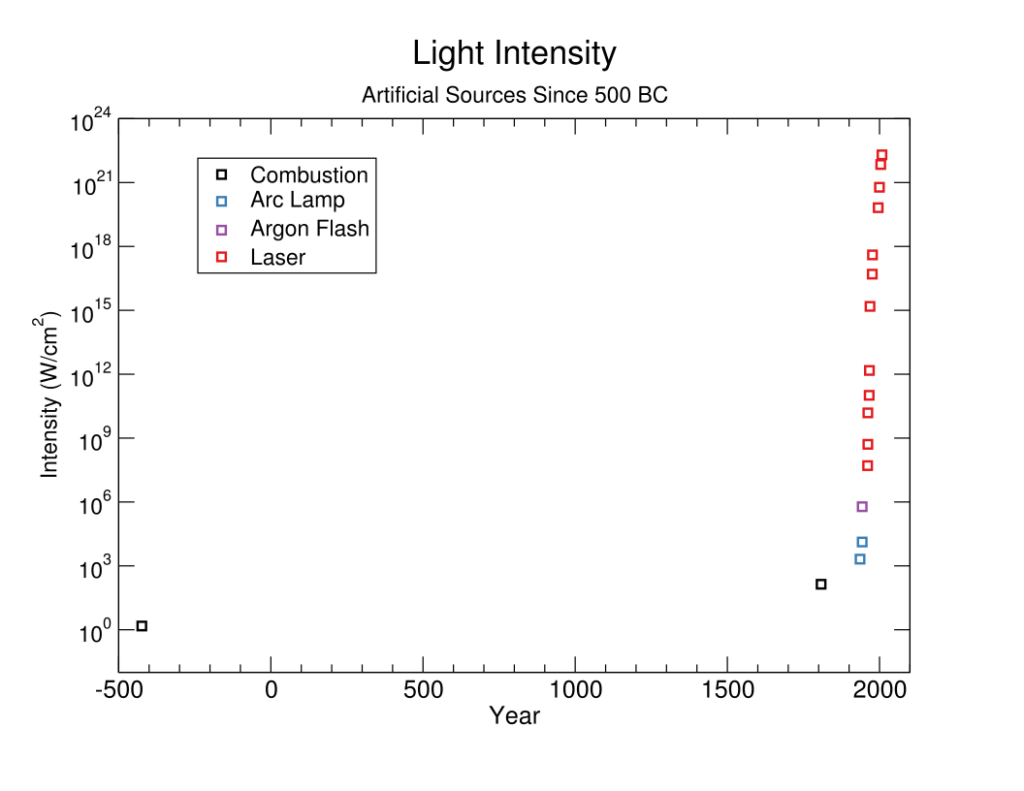

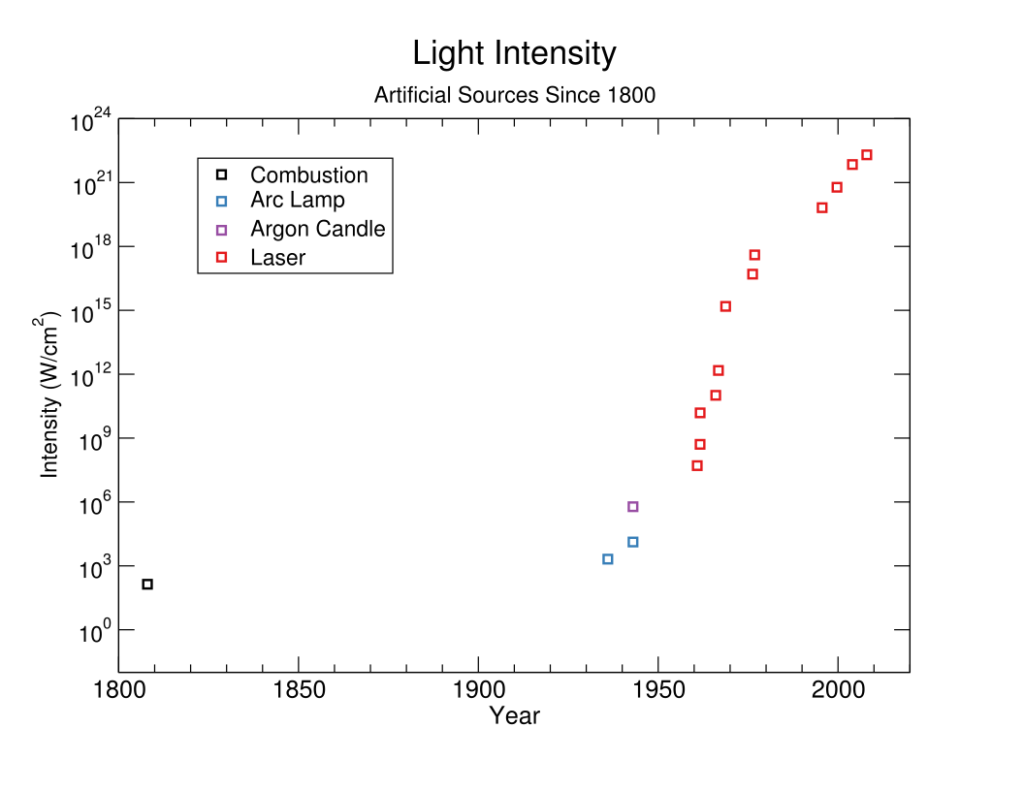

We investigated the highest publicly recorded light intensities we could find, over time.[13] Our estimates are for all light, not just the visible spectrum.

Data

One of our researchers, Rick Korzekwa, collected estimated light intensities produced by new technologies over time into this spreadsheet. Many sources lacked records of the intensity of light produced, so the numbers are often inferred or estimated from available information. These inferences rely heavily on subject matter knowledge, and so have not been checked by another researcher. Figures 2-3 illustrate this data.

Pre-1808 trend

Although we have earlier data, we do not start looking for discontinuities until 1943, because that data is not complete enough to distinguish discontinuous progress from continuous progress; it only suggests the rough shape of the longer-term trend.

Together, focused sunlight and magnesium give us a rough trend of slow long-term progress over the course of at least two millennia, from lenses focusing light to the minimum intensity required to ignite plant material in ancient times, to intensities similar to a camera flash. On average during that time, the brightest known lights increased in intensity by a factor of 1.0025 per year (though we do not know how this was distributed among the years).
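To make the 1.0025 factor concrete, here is a minimal sketch compounding it over the period discussed, from 424 BC to 1808 AD. It only shows what the stated average rate implies; it is not a reconstruction of the underlying data points. There is no year 0, so the span is 424 + 1808 − 1 years.

```python
import math

ANNUAL_FACTOR = 1.0025  # average yearly multiplier stated above

def span_in_years(start_bc: int, end_ad: int) -> int:
    """Years between a BC date and an AD date (there is no year 0)."""
    return start_bc + end_ad - 1

years = span_in_years(424, 1808)
total_factor = ANNUAL_FACTOR ** years
doubling_time = math.log(2) / math.log(ANNUAL_FACTOR)
print(f"{years} years -> total factor ~{total_factor:.0f}")
print(f"doubling time ~{doubling_time:.0f} years")
```

At that rate, intensity grows only a few hundredfold over more than two millennia, doubling roughly every 280 years.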

Due to our uncertainty about the early development of optics for focusing sunlight, the trend from 424 BC to 1808 AD should be taken as the most rapid progress that we believe is likely to have occurred during that period. That is, we take the earliest date for which we have strong verification that burning glasses were used, and assume that these burning glasses produced light just barely intense enough to start a fire. Progress may therefore have been slower, if more intense light was available in 424 BC than we know about. Progress could only have been faster on average if burning glasses capable of starting fires did not exist in 424 BC, or if better light sources were available in 1808 than we are aware of; both seem less likely than that the technology of 424 BC was better than we assume.

Discontinuity measurement

We treat the rate of previous progress as an exponential between the burning glass in 424BC and the first argon candle in 1943. At that point progress has been far above that long term trend for two points in a row, so we assume a new faster trend and measure from the 1936 arc lamp. In 1961, after the trend again has been far surpassed for two points, we start again measuring from the first laser in 1960. See this project’s methodology page for more detail on what we treat as past progress.

Given these choices, we find one large discontinuity from the first argon candle in 1943 (~1000 years of progress in one step), and no other discontinuities of more than ten years since we begin searching in 1943.[14]
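In simplified form, the size of a discontinuity in years is how long the previous trend would have needed to reach the new value. This sketch assumes a pure exponential trend with a fixed annual factor and uses hypothetical input values; the methodology page describes the full procedure, which this does not reproduce in every detail.

```python
import math

def years_of_progress(new_value: float, trend_value_at_date: float,
                      annual_factor: float) -> float:
    """Years the old exponential trend would need to grow from the value it
    predicts for this date to the newly observed value."""
    return math.log(new_value / trend_value_at_date) / math.log(annual_factor)

# Hypothetical numbers: a trend growing 0.4% per year, and a new device
# that beats the trend's prediction for its date by a factor of 50.
print(f"{years_of_progress(50.0, 1.0, 1.004):.0f} years of progress")
```

With a slow prior trend, even a modest multiplicative jump corresponds to a great many years of progress.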

In addition to the size of this discontinuity in years, we have tabulated a number of other potentially relevant metrics here.[15]

Note on mercury arc lamp

The 1936 mercury arc lamp would be a large discontinuity if there had been no progress since 1808. Our impression from various sources is that progress in arc lamp technology was incremental between their first invention at the beginning of the 19th century and the bright mercury lamps available in 1936. However, we did not thoroughly investigate the history and development of arc lamps, so we do not address the question of the first year that such lamps were available or whether they represented a discontinuity.

Note on argon flash

The argon flash seems to have been the first available light source brighter than focused sunlight, after centuries of very slow progress, and it represents a large discontinuity. As discussed above, because we are less certain about the earlier data, our methods imply a relatively high estimate of the prior rate of advancement, and thus a low estimate of the size of the discontinuity. So the real discontinuity is likely to be at least 996 years (unless, for instance, there was accelerating progress during that time that we did not find records of).

Change in rate of progress

Light intensity saw a large increase in the rate of progress, seemingly beginning somewhere between the arc lamps of the 1930s and the lasers of the 1960s. Between 424BC and 1943, light intensity improved by around 0.4% per year on average, optimistically. Between 1943 and 2008, light intensity grew by an average of around 190% per year.[16]

The first demonstrations of working lasers seem to have prompted a flurry of work. For the first fifteen years, maximum light intensity had an average doubling time of four months, and over the roughly five decades following the first lasers, the average doubling time was a year.[17]
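Average growth rates and doubling times are two views of the same exponential. A minimal sketch of the conversion, applied to figures quoted in this section; it assumes steady exponential growth within each period, which the real data only approximates.

```python
import math

def doubling_time_years(annual_growth: float) -> float:
    """Doubling time for growth of `annual_growth` per year (0.004 means 0.4%)."""
    return math.log(2) / math.log(1 + annual_growth)

def annual_growth_from_doubling(doubling_years: float) -> float:
    """Annual growth rate implied by a given doubling time in years."""
    return 2 ** (1 / doubling_years) - 1

print(f"0.4%/yr doubles every ~{doubling_time_years(0.004):.0f} years")
print(f"doubling every 4 months = {annual_growth_from_doubling(4 / 12):.0%} per year")
print(f"doubling every year     = {annual_growth_from_doubling(1.0):.0%} per year")
```

A four-month doubling time corresponds to roughly eightfold growth per year, while a one-year doubling time is 100% annual growth.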

Discussion

Factors of potential relevance to causes of abrupt progress

Technological novelty

One might expect discontinuous progress to arise from particularly paradigm-shifting insights, where a very novel way is found to achieve an old goal. This has theoretical plausibility, and several discontinuities that we know of seem to be associated with fundamentally new methods (for instance, nuclear weapons came from a shift to a new type of energy, and high-temperature superconductors from a shift to a new class of superconducting materials). So we are interested in whether discontinuities in light intensity are evidence for or against such a pattern.

The argon flash was a relatively novel method rather than a subtle refinement of previous technology; however, it did not leverage any fundamentally new physics. Like previous light sources, it works by putting a lot of energy into a material to make it emit light in a relatively disorganized and isotropic manner. What was new was achieving this by way of a shockwave from a high explosive.

It is unclear whether using an explosive shockwave in this way had not been done previously because nobody had thought of it, or because nobody wanted a shorter and brighter flash of light so much that they were willing to use explosives to get it.[18]

The advent of lasers did not produce a substantial discontinuity, but it did involve a mechanism for creating light entirely different from that of previous technologies. Older methods created more intense light by increasing the energy density of light generation (which mostly meant making the source hotter), whereas lasers do it by creating light in a very organized way. Most high-intensity lasers take in a huge amount of light, convert a small portion of it to laser light, and create a laser pulse that is many orders of magnitude more intense than the input light. This meant that lasers could scale to extremely high output power without becoming so hot that the output is that of a blackbody.

Effort directed at progress on the metric

There is a hypothesis that metrics which see a lot of effort directed at them will tend to be more continuous than those which are improved as a side-effect of other efforts. So we are interested in whether these discontinuities fit that pattern.

Although there was interest over the years in using intense light as a weapon,[19] and early photographers wanted safe, convenient, short flashes that could be fired in quick succession, there seems to have been relatively little interest in increasing the peak intensity of a light source. The US military sought bright sources of light for illuminating aircraft or bombing targets at night during World War II, but most of the literature seems to focus on duration, total quantity of light, or practical considerations, with peak intensity a minor issue at most.

The argon flash appears to have been developed more as a high peak power device than as a high peak intensity device.[20] It did not matter whether the light could be focused to a small spot, so long as enough light was given off during the course of an experiment to take pictures. Still, you can only drive power output up so much before you start driving up intensity as well, and the argon flash was extremely high power.

Possibly, argon flashes were developed largely because an application appeared that could make use of very bright light despite the accompanying downsides.

There seems to have been a somewhat puzzling lack of interest in lasers, even after they looked feasible, in part due to a lack of foresight about their usefulness. Charles Townes, one of the scientists responsible for the invention of the laser, remarked that it could have been invented as early as 1930,[21] so it seems unlikely that it was held up by a lack of understanding of the fundamental physics (Einstein first proposed the basic mechanism in 1917[22]). Furthermore, the first paper reporting successful operation of a laser was rejected in 1960, because the reviewers or editors did not understand how it was importantly different from previous work.[23]

Although it seems clear that the scientific community was not eagerly awaiting the advent of the laser, there did seem to be some understanding, at least among those doing the work, that lasers would be powerful. Townes recalled that, before they finished building their laser, they did expect to “at least get a lot of power”,[24] something that could be predicted with relatively straightforward calculations. Immediately after the first results were published, the general sentiment seems to have been that the laser was novel and interesting, but it was reportedly described as “a solution in search of a problem”.[25] As with the argon flash, it appears that intensity was not a priority in itself at the time the laser was invented, and neither were any of the other features of laser light that are now considered valuable, such as narrow spectrum, short pulse duration, and long coherence length.

Most of the work leading to the first lasers was focused on the associated atomic physics, which may help explain why the value of lasers for creating macroscopic quantities of light wasn’t noticed until after they had been built.

In sum, it seems the argon flash and the laser both caused large jumps in a metric that is relevant today but that was not a goal at the time of their development. Both could probably have been invented sooner, had there been interest.

Predictability

One reason to care about discontinuities is that they might be surprising, and so cause instability or problems that we are not prepared for. So we are interested in whether discontinuities were in fact surprising.

It is unclear how predictable the large jump from the argon flash was. Our impression is that without knowledge of the field, it would have been difficult to predict the huge progress from the argon flash ahead of time. High explosives, arc lamps, and flash tubes all produced temperatures of around 4,000 K to 5,000 K, and a jump straight from that to more than 25,000 K would probably have seemed rather unlikely.
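Under the simplifying assumption that all of these sources radiate roughly as blackbodies, the Stefan–Boltzmann T⁴ scaling gives a sense of how large the jump was. This sketch compares only emitted power per unit area, using the temperatures quoted in the paragraph above.

```python
def exitance_ratio(t_new_k: float, t_old_k: float) -> float:
    """Ratio of blackbody power per unit area at two temperatures (T^4 scaling)."""
    return (t_new_k / t_old_k) ** 4

for t_old in (4000.0, 5000.0):
    ratio = exitance_ratio(25000.0, t_old)
    print(f"{t_old:.0f} K -> 25,000 K: about {ratio:,.0f}x more power per area")
```

On these assumptions, the argon flash radiated roughly 600 to 1,500 times more power per unit area than the sources it replaced.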

However, as discussed above, it seems plausible that the technology enabling argon flashes was relatively mature earlier on, and therefore that they might have been predictable to someone familiar with the area.

Notes

- “Specifically, the Stefan–Boltzmann law states that the total energy radiated per unit surface area of a black body across all wavelengths per unit time j* (also known as the black-body radiant emittance) is directly proportional to the fourth power of the black body’s thermodynamic temperature T…” – “Stefan–Boltzmann Law.” In Wikipedia, September 25, 2019. https://en.wikipedia.org/w/index.php?title=Stefan%E2%80%93Boltzmann_law&oldid=917706970.

- “The technology of the burning glass has been known since antiquity. Vases filled with water used to start fires were known in the ancient world.” – “Burning Glass.” In Wikipedia, September 15, 2019. https://en.wikipedia.org/w/index.php?title=Burning_glass&oldid=915774651.

- The Visby lenses are a collection of lens-shaped manufactured objects made of rock crystal (quartz) found in several Viking graves on the island of Gotland, Sweden, and dating from the 11th or 12th century…

…The Visby lenses provide evidence that sophisticated lens-making techniques were being used by craftsmen over 1,000 years ago, at a time when researchers had only just begun to explore the laws of refraction…

“Visby Lenses.” In Wikipedia, September 19, 2019. https://en.wikipedia.org/w/index.php?title=Visby_lenses&oldid=916644137.

- It may seem like it is possible to focus sunlight to an arbitrary intensity, but this turns out not to be the case. Due to thermodynamic and optical constraints, it is not possible to focus light from an incoherent source such as the sun to an intensity brighter than the source itself. Rick has written about this here. In practice, the limit is around 50% of the intensity of the source.

- “The metal itself was first isolated by Sir Humphry Davy in England in 1808.” “Magnesium.” In Wikipedia, October 17, 2019. https://en.wikipedia.org/w/index.php?title=Magnesium&oldid=921795645.

- “The maximum measured combustion temperature is about 3100°C, which is very close to the magnesium adiabatic flame temperature in air, ca. 3200°C” Dreizin, Edward L., Charles H. Berman, and Edward P. Vicenzi. “Condensed-Phase Modifications in Magnesium Particle Combustion in Air.” Scripta Materialia, n.d., 10–1016.

- An example of a low-intensity artificial light source that does not rely on combustion might be a luminescent chemical reaction, such as when phosphorus is exposed to air.

- “To study the implosion design at Los Alamos’ Anchor Ranch site and later the Trinity Site, Optics group members and scientists developed new and improved photographic techniques. These techniques included rotating prism and rotating mirror photography, high-explosive flash (“argon bomb”) photography, and flash x-ray photography.”

Atomic Heritage Foundation. “High-Speed Photography.” Accessed November 8, 2019. https://www.atomicheritage.org/history/high-speed-photography.

- From Wikimedia Commons: Metaveld BV [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)]

- It is plausible that the most intense light to exist (rather than to be recorded) increased gradually but extremely fast at times, rather than discontinuously in a strict sense. This is because the intensity of a source is sometimes ramped up gradually in the lab (though for our purposes these are similar).

- See the methodology page for more detail on how we calculate discontinuities.

- See our methodology page for more details.

- See spreadsheet for calculations.

- See spreadsheet for calculations.

- Both the film industry and the explosives industry were publishing papers suggesting a need for very short and bright flashes of light for high-speed photography in the 1910s and 1920s, but most of the work focused on repeatability, convenience, and total quantity of light, rather than peak power output or intensity.

- “Archimedes, the renowned mathematician, was said to have used a burning glass as a weapon in 212 BC, when Syracuse was besieged by Marcus Claudius Marcellus. The Roman fleet was supposedly incinerated, though eventually the city was taken and Archimedes was slain. The legend of Archimedes gave rise to a considerable amount of research on burning glasses and lenses until the late 17th century.” – “Burning Glass.” In Wikipedia, September 15, 2019. https://en.wikipedia.org/w/index.php?title=Burning_glass&oldid=915774651.

- “This raises the question: why weren’t lasers invented long ago, perhaps by 1930 when all the necessary physics was already understood, at least by some people?”

“The First Laser.” Accessed November 9, 2019. https://www.press.uchicago.edu/Misc/Chicago/284158_townes.html.

- In 1917, Albert Einstein established the theoretical foundations for the laser and the maser in the paper Zur Quantentheorie der Strahlung (On the Quantum Theory of Radiation)

“Laser.” In Wikipedia, November 4, 2019. https://en.wikipedia.org/w/index.php?title=Laser&oldid=924565157.

- “Theodore Maiman made the first laser operate on 16 May 1960 at the Hughes Research Laboratory in California, by shining a high-power flash lamp on a ruby rod with silver-coated surfaces. He promptly submitted a short report of the work to the journal Physical Review Letters, but the editors turned it down. Some have thought this was because the Physical Review had announced that it was receiving too many papers on masers—the longer-wavelength predecessors of the laser—and had announced that any further papers would be turned down. But Simon Pasternack, who was an editor of Physical Review Letters at the time, has said that he turned down this historic paper because Maiman had just published, in June 1960, an article on the excitation of ruby with light, with an examination of the relaxation times between quantum states, and that the new work seemed to be simply more of the same.”

“The First Laser.” Accessed November 9, 2019. https://www.press.uchicago.edu/Misc/Chicago/284158_townes.html.

- “Oral-History:Charles Townes (1991) – Engineering and Technology History Wiki.” Accessed November 9, 2019. https://ethw.org/Oral-History:Charles_Townes_(1991).

- Bertolotti, Mario. The History of the Laser. CRC Press, 2004. https://books.google.co.uk/books?id=JObDnEtzMJUC&pg=PA262&lpg=PA262&dq=laser+%22solution+in+search+of+a+problem%22&source=bl&ots=tzL8lw1cU5&sig=ACfU3U3uHXVfadjwktCm1SmPU7oYz66mlA&hl=en&sa=X&redir_esc=y#v=onepage&q=laser%20%22solution%20in%20search%20of%20a%20problem%22&f=false