How much computing capacity exists in GPUs and TPUs in Q1 2023?

Published 3 April 2023, last updated 3 April 2023

(Dec 10 2024 Update: This analysis did not consider typical versus maximum performance in computing hardware. The data and figures presented here are likely based on maximum performance.)

A back-of-the-envelope calculation based on market size, price-performance, hardware lifespan estimates, and the sizes of Google’s data centers suggests that around 3.98 * 10^21 FLOP/s of computing capacity exists on GPUs and TPUs as of Q1 2023.

Details

Estimating dollars used to buy GPUs per year

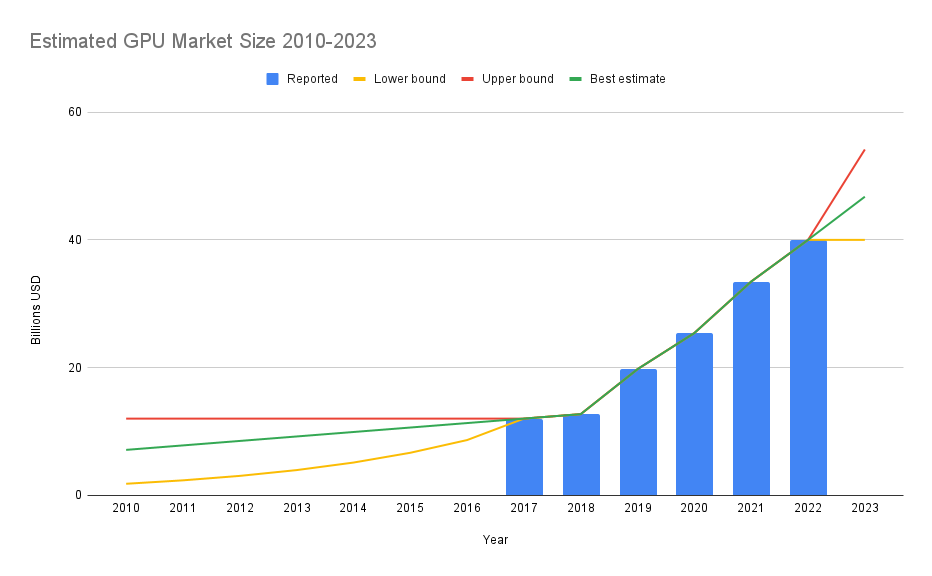

Consulting firms reported their estimates of the worldwide GPU market size for 2017, 2018, 2019, 2020, 2021, and 2022.1)2)3)4)5)6) Unfortunately, the details of these reports are paywalled, so the methodology and scope of these estimates are not completely clear. A sample of GMI’s report on 2022 GPU market size seems to indicate that the estimate is reasonably comprehensive.7)

- Our best guess estimate models market size growth as a piecewise linear function, with slow growth before 2017 and fast growth after 2017.

- Our lower bound estimate fits the years before 2017 to an exponential curve and assumes that the market size will not change between 2022 and 2023.

- Our upper bound estimate assumes that there was no change in market size between 2010 and 2017 and fits 2023 to an exponential curve.

Estimating FLOP/s per dollar GPUs had per year

Hobbhahn & Besiroglu (2022) used a dataset of GPUs to find trends in FLOP/s per dollar over time. This provides a simple way to convert the above estimates of total spending on GPUs per year into estimates of total GPU computing capacity produced per year. Hobbhahn & Besiroglu found no statistically significant difference in the trends of FP16 and FP32 precision compute, and we follow their example by focusing on FP32 precision for the computing capacity of GPUs.8)

- Our best guess estimate for FLOP/s per dollar over time is based on the trendline over all of the data in the dataset.

- Our lower bound estimate is based on the trendline for machine learning GPUs.

- Our upper bound estimate is based on the trendline for the most price-performance efficient GPUs.

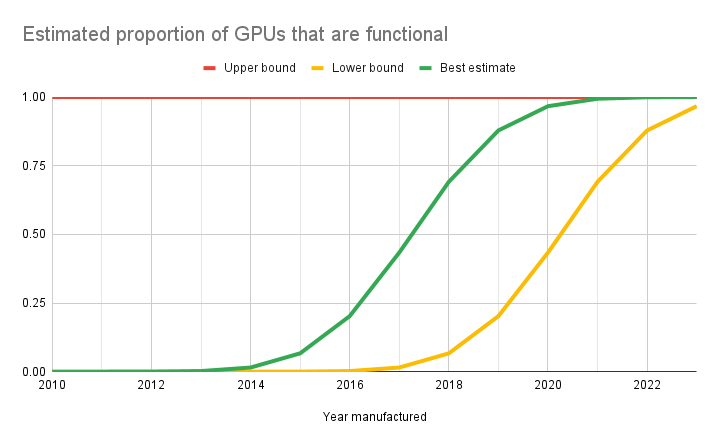

Estimating proportion of GPUs still functional per year

Because GPUs eventually fail, the computing capacity produced in previous years does not all still exist. We did not find much empirical data about the usual lifespan of GPUs, so the estimates in this section are highly speculative.

- Based on anecdotal claims that GPUs usually last 5 years with heavy use or 7 or more years with moderate use, our best guess estimate is that GPU lifespan is normally distributed with a mean of 6 years and a standard deviation of 1.5 years.9)

- Because Ostrouchov et al. (2017) show that GPUs in datacenters usually last around 3 years, our lower bound estimate has a mean of 3 years and a standard deviation of 1.5 years.10)

- For our upper bound estimate, we assumed that all GPUs since 2010 still exist.
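
The best guess and lower bound lifespan assumptions above can be turned into a surviving fraction for each GPU age. The following is a minimal sketch, assuming that the fraction of GPUs still functional at a given age is one minus the normal cumulative distribution function of the lifespan. The function name `surviving_fraction` is illustrative and is not taken from the original analysis.

```python
import math

def surviving_fraction(age_years, mean_years, sd_years):
    """Fraction of GPUs still working at a given age,
    assuming lifespans are normally distributed (an assumption of this sketch)."""
    z = (age_years - mean_years) / (sd_years * math.sqrt(2))
    # 1 - CDF of the normal distribution, written with the error function.
    return 0.5 * (1.0 - math.erf(z))

for age in range(0, 11, 2):
    best = surviving_fraction(age, 6.0, 1.5)  # best guess: mean 6, sd 1.5
    low = surviving_fraction(age, 3.0, 1.5)   # lower bound: mean 3, sd 1.5
    print(f"age {age:2d} years: best guess {best:.3f}, lower bound {low:.3f}")
```

In this sketch the normal distribution is not truncated at zero, so the lower bound parameters already treat roughly 2% of GPUs as failed at age 0. The upper bound corresponds to a surviving fraction of 1 for every year since 2010.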

Estimating FLOP/s of all GPUs

To estimate total GPU computing capacity, we summed, over all years, the product of each year’s estimated market size, price-performance, and fraction of GPUs still functional.

- Our best guess estimate for total GPU computing capacity is 3.95 * 10^21 FLOP/s (FP32)

- Our lower bound estimate for total GPU computing capacity is 1.40 * 10^21 FLOP/s (FP32)

- Our upper bound estimate for total GPU computing capacity is 7.71 * 10^21 FLOP/s (FP32)
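
The summation described above can be sketched as follows. The function name `total_capacity` and all input values are hypothetical placeholders chosen to make the arithmetic easy to follow; they are not the figures used in the analysis.

```python
def total_capacity(spend_usd, flops_per_usd, surviving):
    """Sum over years of (dollars spent) * (FLOP/s per dollar) * (fraction surviving)."""
    return sum(
        spend_usd[year] * flops_per_usd[year] * surviving[year]
        for year in spend_usd
    )

# Placeholder inputs for illustration only, NOT values from the analysis.
spend_usd = {2021: 1.0e9, 2022: 2.0e9}        # dollars spent on GPUs that year
flops_per_usd = {2021: 1.0e10, 2022: 2.0e10}  # FLOP/s bought per dollar that year
surviving = {2021: 0.5, 2022: 1.0}            # fraction of that year's GPUs still working

print(f"{total_capacity(spend_usd, flops_per_usd, surviving):.2e} FLOP/s")
```

Each of the three scenarios would pair its own market size, price-performance, and survival series in the same way.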

Estimating FLOP/s of all TPUs

TPUs are specialized chips designed by Google for machine learning. These chips can be rented through the cloud, and seem to be located in 7 Google data centers.11) There are 3 versions of the TPU available: V2, which has 45 * 10^12 FLOP/s per chip, V3, which has 123 * 10^12 FLOP/s per chip, and V4, which has 275 * 10^12 FLOP/s per chip.12)13) Google does not say exactly how many TPUs it owns, but the company did report that its data center in Oklahoma (the only one with V4 chips available) had 9 * 10^18 FLOP/s of computing capacity.14)

- Assuming that every Google data center with TPUs has the same number of TPUs, and that a data center with two types of TPU has equal numbers of each, our best guess estimate for the computing capacity of all TPUs is 2.93 * 10^19 FLOP/s (bf16).

- Because the only computing capacity publicly reported by Google is that of the Oklahoma data center, our lower bound estimate assumes that the 9 * 10^18 FLOP/s (bf16) is the total for all TPUs.

- Because Google claimed that their Oklahoma data center had more computing capacity than any other publicly available compute cluster, our upper bound estimate assumes that each of the 7 data centers has 9 * 10^18 FLOP/s of compute, for a total of 6.3 * 10^19 FLOP/s (bf16).

Estimated FLOP/s of all GPUs and TPUs

Although the above estimates for the computing capacity of all TPUs and of all GPUs are measured in different precisions, bf16 and FP32 seem to be similar enough that computing capacities measured in both can reasonably be added together for a rough calculation.15) Adding the estimates together gives the estimates below.

- Our best guess estimate for computing capacity of all GPUs and TPUs is 3.98 * 10^21 FLOP/s

- Our lower bound estimate is 1.41 * 10^21 FLOP/s

- Our upper bound estimate is 7.77 * 10^21 FLOP/s
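
As a check on the arithmetic, the short sketch below adds the GPU and TPU figures quoted in the text. The variable names are illustrative, and the TPU upper bound is written as 7 data centers at 9 * 10^18 FLOP/s each.

```python
# Figures quoted in the text, in FLOP/s.
gpu = {"best guess": 3.95e21, "lower bound": 1.40e21, "upper bound": 7.71e21}  # FP32
tpu = {"best guess": 2.93e19, "lower bound": 9e18, "upper bound": 7 * 9e18}    # bf16

# Add the two precisions together, as the text does for a rough calculation.
combined = {name: gpu[name] + tpu[name] for name in gpu}

for name, value in combined.items():
    print(f"{name}: {value / 1e21:.2f} * 10^21 FLOP/s")
# best guess: 3.98 * 10^21 FLOP/s
# lower bound: 1.41 * 10^21 FLOP/s
# upper bound: 7.77 * 10^21 FLOP/s
```

On these figures, TPUs account for under 1% of the best guess total, so the combined estimate is driven almost entirely by the GPU estimate.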

Discussion

This calculation is rough, and the estimates could be wrong. In particular, some uncertainty remains about the methods used by the cited consulting firms to estimate GPU market size, and the details of those methods might substantially change the estimates in either direction.

Google’s Pathways Language Model (PaLM) was trained using 2.56 * 10^24 FLOP in total over 64 days.16) Our best guess estimate would indicate that training PaLM used about 0.01% of the world’s current GPU and TPU computing capacity, which seems plausible.17)
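
The PaLM comparison can be reproduced with a few lines of arithmetic. This is a sketch that assumes the training compute was spread evenly over the 64 days; the variable names are illustrative, and the cited footnote may have derived the figure differently.

```python
palm_training_flop = 2.56e24    # total training compute quoted in the text
training_days = 64              # training duration quoted in the text
world_capacity_flops = 3.98e21  # best guess for all GPUs and TPUs, in FLOP/s

# Assumption of this sketch: compute was used at a constant rate for the whole period.
training_seconds = training_days * 24 * 60 * 60
palm_rate_flops = palm_training_flop / training_seconds
share = palm_rate_flops / world_capacity_flops

print(f"average training rate: {palm_rate_flops:.2e} FLOP/s")
print(f"share of estimated capacity: {share:.4%}")
```

Under that assumption the average rate is about 4.6 * 10^17 FLOP/s, which is roughly 0.01% of the best guess estimate.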

There are likely other, possibly better, ways to estimate the computing capacity of GPUs and TPUs. Estimates calculated in different ways could be useful for reducing uncertainty. Other methods might involve researching the manufacturing capacity of semiconductor fabrication plants. They may also make it feasible to estimate the computing capacity of all microprocessors, including CPUs, rather than just GPUs and TPUs.