
Trend in compute used in training for headline AI results

Published 17 May, 2018

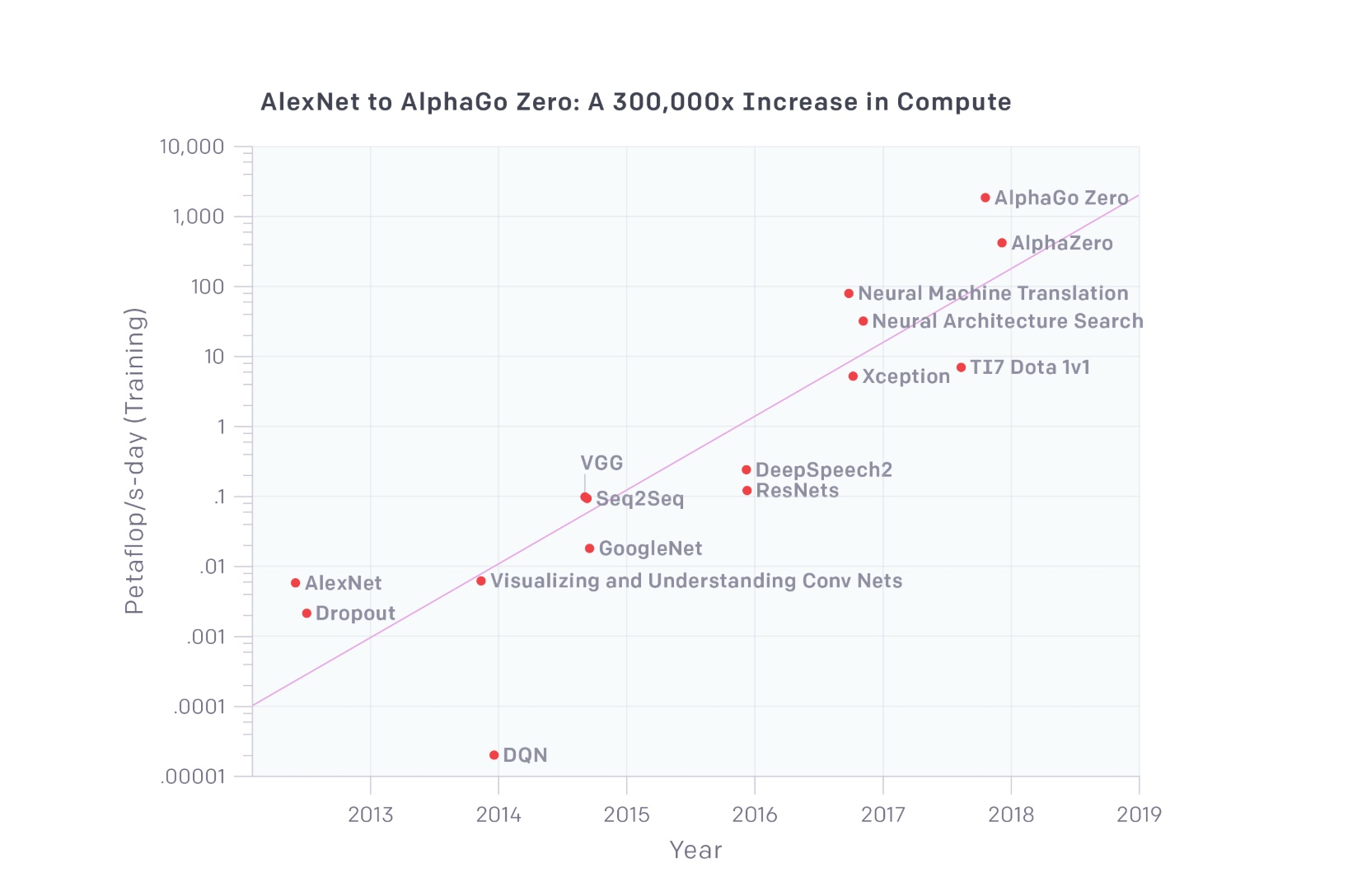

Compute used in the largest AI training runs appears to have roughly doubled every 3.5 months between 2012 and 2018.

Details

According to Amodei and Hernandez, on the OpenAI Blog:

…since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially with a 3.5 month-doubling time (by comparison, Moore’s Law had an 18-month doubling period). Since 2012, this metric has grown by more than 300,000x (an 18-month doubling period would yield only a 12x increase)…

They give a figure and some of their calculations. We have not verified their calculations or looked for other reports on this issue.
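The two growth figures in the quote can be checked against each other with simple exponential arithmetic. A quantity that doubles every d months grows by a factor of 2^(t/d) over t months, so a growth factor F corresponds to log2(F) doublings. The sketch below is our own illustration, not the authors' calculation. The function names are hypothetical, and the only inputs taken from the quote are the 300,000x and 12x factors and the 3.5-month and 18-month doubling times.

```python
import math


def doublings(growth_factor: float) -> float:
    """Number of doublings needed to reach a given growth factor."""
    return math.log2(growth_factor)


def implied_span_months(growth_factor: float, doubling_months: float) -> float:
    """Elapsed time (in months) implied by a growth factor at a fixed doubling time."""
    return doublings(growth_factor) * doubling_months


def growth_over(span_months: float, doubling_months: float) -> float:
    """Growth factor after span_months of exponential growth with the given doubling time."""
    return 2 ** (span_months / doubling_months)


# Figures quoted from the OpenAI post (illustrative check only).
span_fast = implied_span_months(300_000, 3.5)  # 300,000x at a 3.5-month doubling time
span_moore = implied_span_months(12, 18)       # 12x at an 18-month doubling time

print(f"300,000x at 3.5-month doubling: {span_fast:.1f} months")
print(f"12x at 18-month doubling: {span_moore:.1f} months")

# Growth an 18-month doubling time would give over the span implied by the 3.5-month figure.
print(f"18-month doubling over that span: {growth_over(span_fast, 18):.1f}x")
```

Both factors imply a span of a little over five years (about 64 months), and an 18-month doubling time over that span gives roughly 11.6x, so the two quoted figures are mutually consistent. This checks only the arithmetic, not the underlying compute estimates.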

Last modified: 2022/09/21 07:37