
2023 Expert Survey on Progress in AI

Published 17 August, 2023. Last updated 29 January, 2024.

The 2023 Expert Survey on Progress in AI is a survey of 2,778 AI researchers that AI Impacts ran in October 2023.

Details

Background

The 2023 Expert Survey on Progress in AI (2023 ESPAI) is a rerun of the 2022 ESPAI and the 2016 ESPAI, previous surveys run by AI Impacts in collaboration with others. Almost all of the questions in the 2023 ESPAI are identical to those in both the 2022 ESPAI and the 2016 ESPAI.

A preprint about the 2023 ESPAI is available here.

Survey methods

Questions

The questions in the 2023 ESPAI are nearly identical to those in the 2022 ESPAI and the 2016 ESPAI. As in those surveys, different phrasings of some questions were randomly assigned to each respondent to measure the effects of framing differences. Each participant also received a randomized subset of certain question types and questions within certain types. Because of this, most questions have a significantly smaller number of responses than the total number of responses to the survey as a whole.

Some questions were added to the 2023 ESPAI which were not in either of the two previous surveys. We refined the new questions through an iterative process involving several rounds of testing the questions in verbal interviews with computer science graduate students and others.

Participants

We collected the names of authors who published in 2022 at a selection of top-tier machine learning venues (NeurIPS, ICML, ICLR, AAAI, JMLR, and IJCAI) and were able to find email addresses for 20,066 (92%) of them. The resulting list of emails was put into a random order by assigning each email a random unique number with Google Sheets' “Randomize range” feature. The first 1,003 emails on the randomly ordered list (about 5% of the total) were assigned to a pilot group that would receive payment for participating, and the next 1,003 were assigned to a pilot group that would not. The remaining emails were assigned to the main survey group.
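A minimal Python sketch of this assignment, assuming the email list is already deduplicated. Here `random.shuffle` stands in for sorting the spreadsheet by a random number (both produce a uniformly random order); the `assign_groups` helper and the placeholder addresses are hypothetical.

```python
import random


def assign_groups(emails, pilot_size=1003, seed=None):
    """Randomly order the emails, then split them into paid pilot,
    unpaid pilot, and main survey groups."""
    shuffled = list(emails)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    paid_pilot = shuffled[:pilot_size]
    unpaid_pilot = shuffled[pilot_size:2 * pilot_size]
    main = shuffled[2 * pilot_size:]
    return paid_pilot, unpaid_pilot, main


emails = [f"author{i}@example.org" for i in range(20_066)]  # placeholder addresses
paid, unpaid, main = assign_groups(emails, seed=0)
print(len(paid), len(unpaid), len(main))  # 1003 1003 18060
```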

The pilot study ran from October 11 to October 15, 2023. Based on the response rates in the paid group versus the unpaid group, we decided to offer payment to all survey participants. Each participant was offered a $50 reward, to be issued through a third-party service. Depending on their country (as determined by IP address), participants can use the service to choose between a gift card, a pre-paid Mastercard, or a donation to one of 15 charities.

On October 15, the survey was sent to the main survey group. The survey remained open until October 24, 2023. Out of the 20,066 emails we contacted, 1,607 (8%) bounced or failed, leaving 18,459 functioning email addresses. We received 2,778 responses (where the person answered at least one question), for a response rate of 15%. 95% of these responses were deemed ‘finished’ by Qualtrics, which appears to correspond to the participant seeing the last question.
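As a quick consistency check, the bounce rate and response rate above can be recomputed from the raw counts. Note that the response rate is taken relative to functioning addresses, not to all addresses contacted.

```python
contacted = 20_066
failed = 1_607      # emails that bounced or otherwise failed
responses = 2_778   # people who answered at least one question

functioning = contacted - failed
print(functioning)                       # 18459
print(f"{failed / contacted:.0%}")       # 8%
print(f"{responses / functioning:.0%}")  # 15%
```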

Changes from 2016 and 2022 ESPAI surveys

These are some notable differences from the 2022 Expert Survey on Progress in AI:

- We recruited participants from twice as many conferences as in 2022.

- We made some changes to the order and flow of the questions.

- We gave some participants a new version of the extinction risk question that was phrased to include a timeframe (“within the next 100 years”).

- Some participants were given new questions that were not in the 2022 ESPAI.

Full data on differences between surveys is available here.

Definitions

- “Aggregate forecast”: A cumulative distribution function (CDF) that combines year-probability pairs from both the fixed-years and fixed-probabilities framings. It is found by fitting a gamma CDF to each individual response (three year-probability pairs) and then taking the mean of the fitted curves.

- “Fixed-probabilities framing”: A way of asking about how soon an AI milestone will be reached, by asking for an estimated year by which the milestone will be feasible with a given probability.

- “Fixed-years framing”: A way of asking about how soon an AI milestone will be reached, by asking for an estimated probability that the milestone will be reached by a given year.

- “Full automation of labor (FAOL)”: When for any occupation, machines could be built to carry out the task better and more cheaply than human workers.

- “High-level machine intelligence (HLMI)”: AI that can, unaided, accomplish every task better and more cheaply than human workers.
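The aggregate forecast described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the survey's actual analysis code: it assumes each fit minimizes the squared error between the gamma CDF and the respondent's three probabilities, uses a coarse grid search in place of a proper optimizer, measures time in years from the survey, and takes the fixed-years horizons to be the 10, 20, and 40 years described in the HLMI section. All function names and the two example respondents are hypothetical.

```python
import math


def gamma_cdf(x, shape, scale):
    """Gamma CDF at x: the regularized lower incomplete gamma P(shape, x / scale)."""
    if x <= 0:
        return 0.0
    z = x / scale
    if z > 500:
        # Far in the right tail for the shapes searched below (at most ~13): the CDF
        # is 1.0 to double precision, and stopping here avoids overflow in the series.
        return 1.0
    # Series: P(a, z) = z^a e^(-z) / Gamma(a) * sum_{n>=0} z^n / (a (a+1) ... (a+n))
    term = 1.0 / shape
    total = term
    for n in range(1, 2000):
        term *= z / (shape + n)
        total += term
        if term < 1e-12 * total:
            break
    return min(1.0, math.exp(shape * math.log(z) - z - math.lgamma(shape)) * total)


def from_fixed_probabilities(years):
    """Years given for 10%, 50% and 90% probability -> (years, probability) pairs."""
    return list(zip(years, (0.1, 0.5, 0.9)))


def from_fixed_years(probs, horizons=(10, 20, 40)):
    """Probabilities given for fixed horizons (years from the survey) -> pairs."""
    return list(zip(horizons, probs))


def fit_gamma(pairs):
    """Least-squares fit of (shape, scale) to one response by coarse grid search."""
    best = None
    for i in range(45):          # shape from 0.2 up to ~13, in 10% steps
        shape = 0.2 * 1.1 ** i
        for j in range(65):      # scale from 0.5 up to ~220 years, in 10% steps
            scale = 0.5 * 1.1 ** j
            sse = sum((gamma_cdf(y, shape, scale) - p) ** 2 for y, p in pairs)
            if best is None or sse < best[0]:
                best = (sse, shape, scale)
    return best[1], best[2]


def aggregate_median_year(fits, survey_year=2023, horizon=300):
    """First whole year in which the mean of the fitted CDFs reaches 50%."""
    for t in range(horizon + 1):
        mean_cdf = sum(gamma_cdf(t, shape, scale) for shape, scale in fits) / len(fits)
        if mean_cdf >= 0.5:
            return survey_year + t
    return None  # the mean curve stays below 50% within the horizon


# Two hypothetical respondents, one per framing.
responses = [
    from_fixed_probabilities((10, 20, 40)),  # 10% by 10 years, 50% by 20, 90% by 40
    from_fixed_years((0.3, 0.5, 0.7)),       # 30% in 10 years, 50% in 20, 70% in 40
]
fits = [fit_gamma(pairs) for pairs in responses]
median_year = aggregate_median_year(fits)
print(median_year)
```

Because both framings are first converted into the same (years, probability) pairs, a single fitting routine serves every respondent, and the aggregate is the mean of the fitted CDFs rather than, say, the mean of individual median years.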

Results

The full dataset of anonymized responses to the survey is available here.

Timing of human-level performance

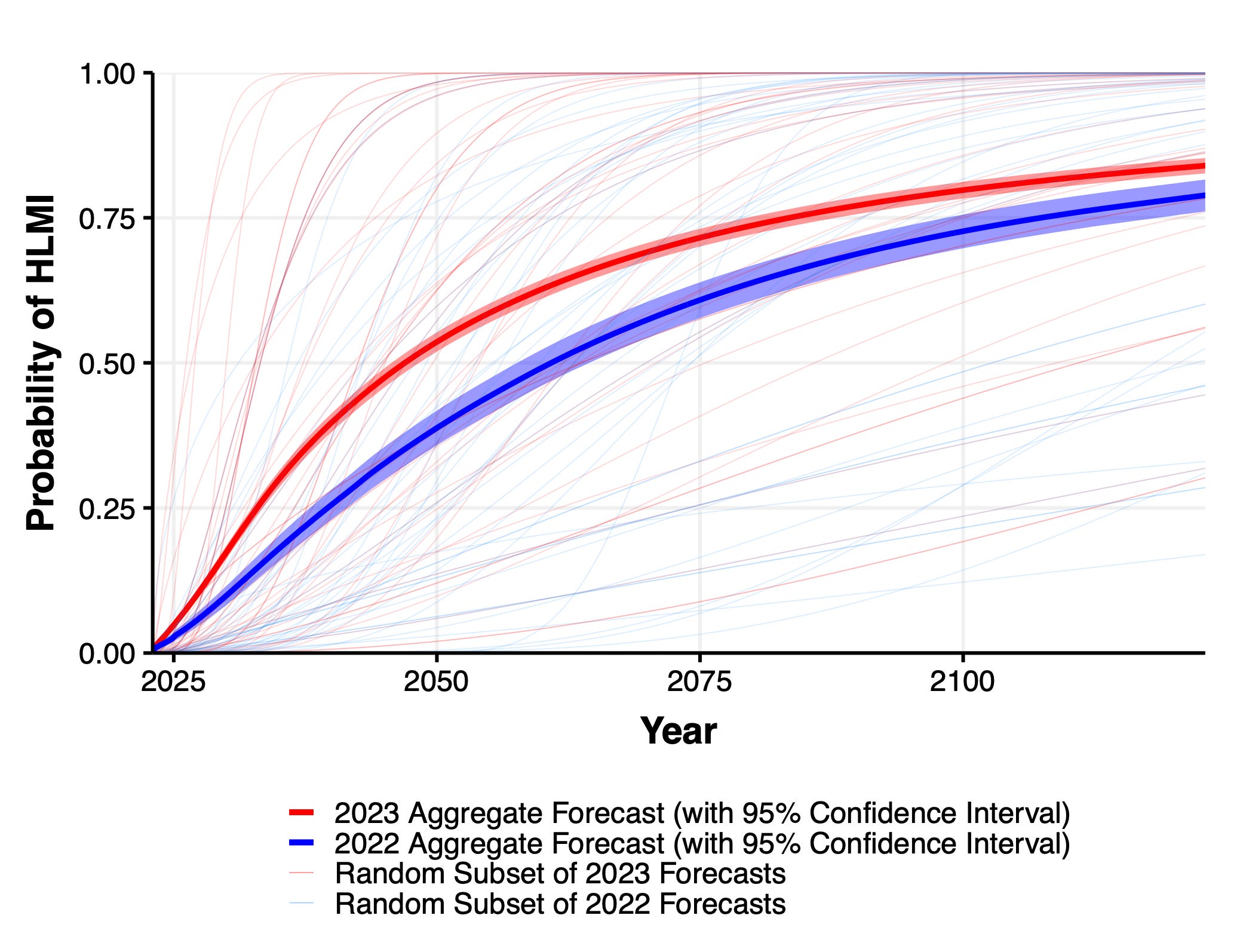

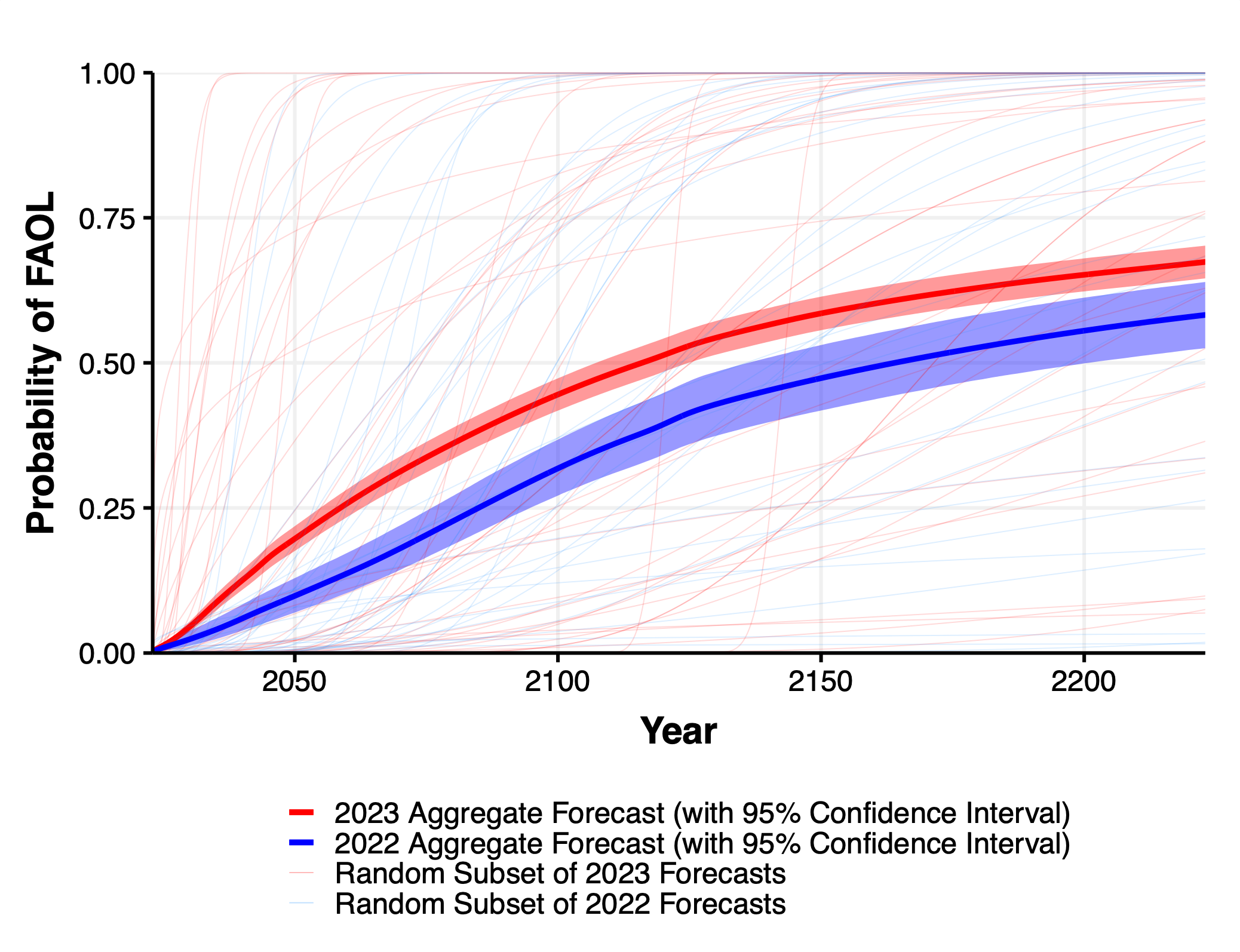

We asked about the timing of human-level performance by asking some participants about how soon they expect “high-level machine intelligence” (HLMI) and asking others about how soon they expect “full automation of labor” (FAOL). As in previous surveys, participants who were asked about FAOL tended to give significantly longer timelines than those asked about HLMI.

High-level machine intelligence (HLMI)

Say we have ‘high-level machine intelligence’ when unaided machines can accomplish every task better and more cheaply than human workers. Ignore aspects of tasks for which being a human is intrinsically advantageous, e.g. being accepted as a jury member. Think feasibility, not adoption.

For the purposes of this question, assume that human scientific activity continues without major negative disruption.

Half of the participants were asked about the number of years until they expected a 10%, 50%, and 90% probability of HLMI existing (fixed-probabilities framing) and half were asked about the probability they would give to HLMI existing in 10, 20, and 40 years (fixed-years framing).

We aggregated the 1714 responses to this question by fitting each response to a gamma CDF and finding the mean curve of those CDFs. The resulting aggregate forecast gives a 50% chance of HLMI by 2047, down thirteen years from 2060 in the 2022 ESPAI.

Full automation of labor (FAOL)

Say we have reached ‘full automation of labor’ when all occupations are fully automatable. That is, when for any occupation, machines could be built to carry out the task better and more cheaply than human workers.

As with the HLMI question, half of the participants who were given the FAOL question were asked using the fixed-probabilities framing and the other half using the fixed-years framing.

The 774 responses to this question were used to create an aggregate forecast which gives a 50% chance of FAOL by 2116, down 48 years from 2164 in the 2022 ESPAI.

Intelligence explosion

We asked about the likelihood of an 'intelligence explosion' by asking each participant one of three related questions.

Chance that the intelligence explosion argument is about right

Some people have argued the following:

If AI systems do nearly all research and development, improvements in AI will accelerate the pace of technological progress, including further progress in AI.

Over a short period (less than 5 years), this feedback loop could cause technological progress to become more than an order of magnitude faster.

How likely do you find this argument to be broadly correct?

Of the 299 responses to this question,

- 9% said “Quite likely (81-100%)”

- 20% said “Likely (61-80%)”

- 24% said “About even chance (41-60%)”

- 24% said “Unlikely (21-40%)”

- 23% said “Quite unlikely (0-20%)”

Probability of dramatic technological speedup

Assume that HLMI will exist at some point.

How likely do you then think it is that the rate of global technological improvement will dramatically increase (e.g. by a factor of ten) as a result of machine intelligence:

Within two years of that point? _% chance

Within thirty years of that point? _% chance

There were 298 responses to this question. The median answer was 20% for two years and 80% for thirty years.

Probability of superintelligence

Assume that HLMI will exist at some point.

How likely do you think it is that there will be machine intelligence that is vastly better than humans at all professions (i.e. that is vastly more capable or vastly cheaper):

Within two years of that point? _% chance

Within thirty years of that point? _% chance

There were 282 responses to this question. The median answer was 10% for two years and 60% for thirty years.

AI Interpretability in 2028

For typical state-of-the-art AI systems in 2028, do you think it will be possible for users to know the true reasons for systems making a particular choice? By “true reasons” we mean the AI correctly explains its internal decision-making process in a way humans can understand. By “true reasons” we do not mean the decision itself is correct.

Of the 912 responses to this question,

- 5% said “Very unlikely (<10%)”

- 35% said “Unlikely (10-40%)”

- 20% said “Even odds (40-60%)”

- 15% said “Likely (60-90%)”

- 5% said “Very likely (>90%)”

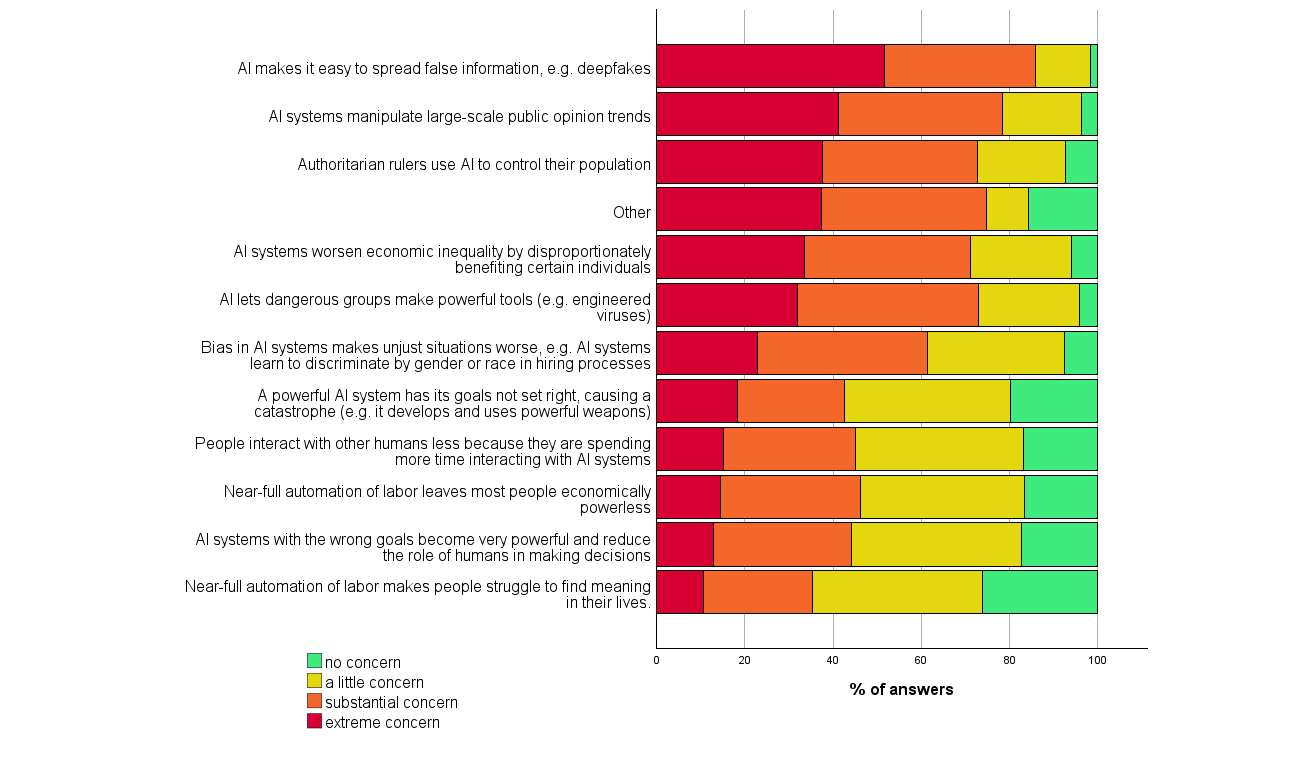

How concerning are 11 future AI-related scenarios?

1345 participants rated their level of concern for 11 AI-related scenarios over the next thirty years. Measured by the percentage of respondents who rated a scenario a “substantial” or “extreme” concern, the most concerning scenarios were: spread of false information, e.g. deepfakes (86%); manipulation of large-scale public opinion trends (79%); AI letting dangerous groups make powerful tools, e.g. engineered viruses (73%); authoritarian rulers using AI to control their populations (73%); and AI systems worsening economic inequality by disproportionately benefiting certain individuals (71%).

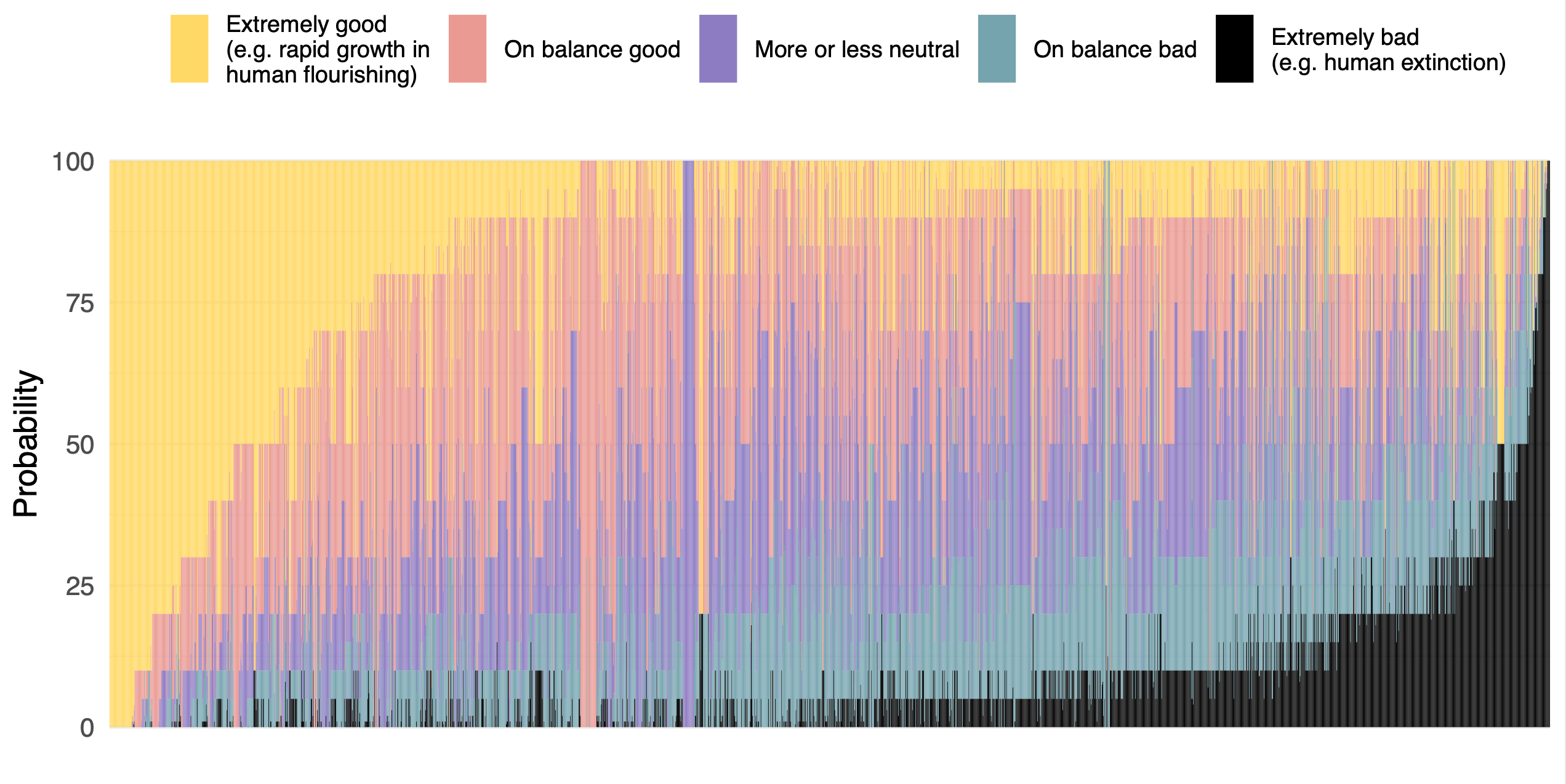

Overall impact of HLMI

Assume for the purpose of this question that HLMI will at some point exist. How positive or negative do you expect the overall impact of this to be on humanity, in the long run?

Participants were asked for the probability they would give to different kinds of impact. Below are the medians and means of the 2704 responses:

| Overall impact of HLMI | Median response | Mean response |
|---|---|---|
| “Extremely good (e.g. rapid growth in human flourishing)” | 10% | 23% |
| “On balance good” | 25% | 29% |
| “More or less neutral” | 20% | 21% |
| “On balance bad” | 15% | 18% |
| “Extremely bad (e.g. human extinction)” | 5% | 9% |

Preferred rate of progress

What rate of global AI progress over the next five years would make you feel most optimistic for humanity's future? Assume any change in speed affects all projects equally.

Of the 675 responses to this question,

- 4.8% said “Much slower”

- 29.9% said “Somewhat slower”

- 26.9% said “Current speed”

- 22.8% said “Somewhat faster”

- 15.6% said “Much faster”

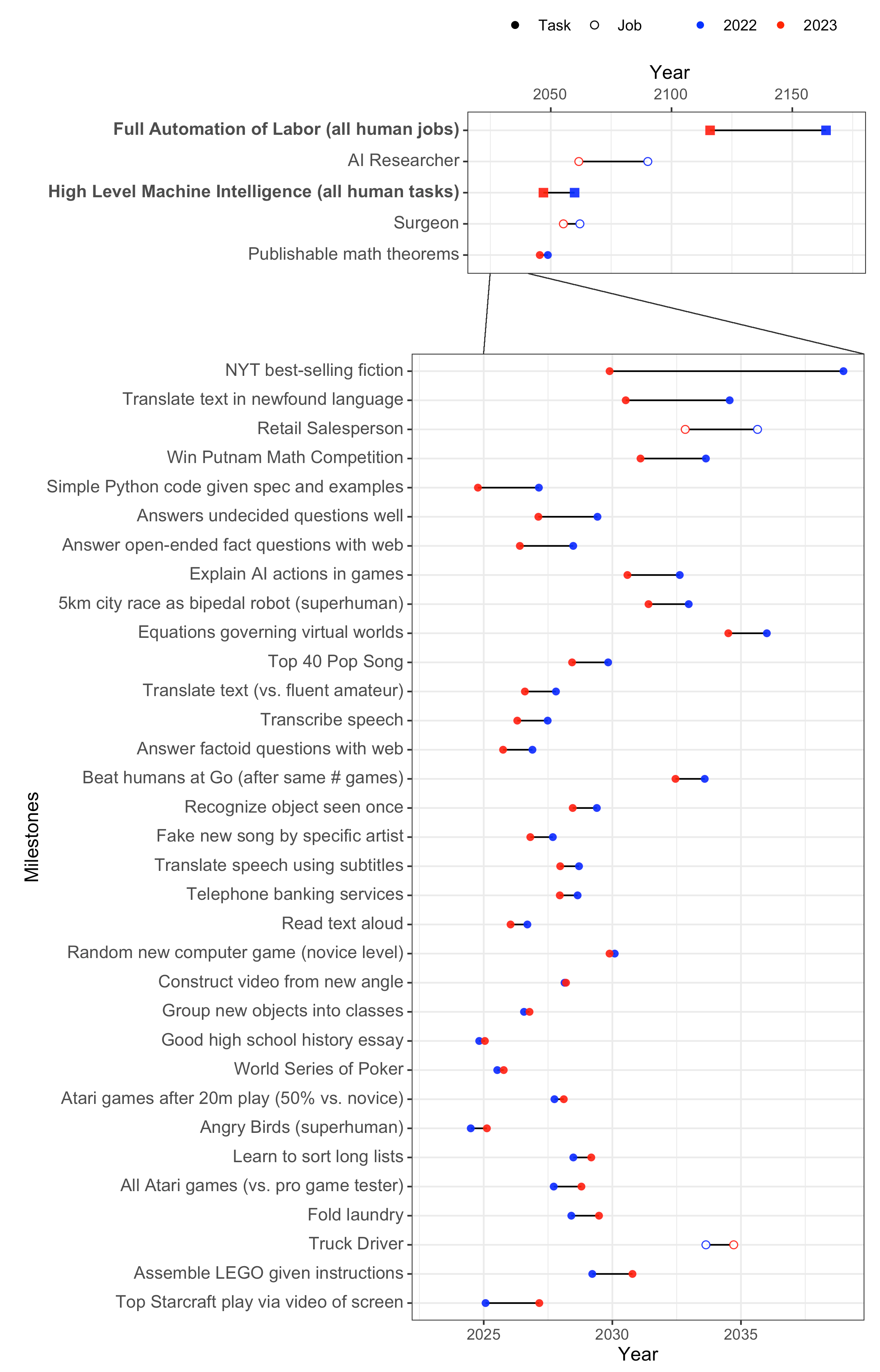

How soon will 39 tasks be feasible for AI?

Participants were asked when each of 39 tasks would become “feasible” for AI, i.e. when “one of the best resourced labs could implement it in less than a year if they chose to. Ignore the question of whether they would choose to.” Each respondent was asked about four of the tasks, so each task received around 250 estimates.

As with the questions about timelines to human-level performance, participants were asked for three year-probability pairs using either the fixed-years or the fixed-probabilities framing. For most of the 32 tasks that also appeared in the 2022 survey, the aggregate forecasts in this survey predicted earlier feasibility than the 2022 forecasts did.

The alignment problem

Stuart Russell summarizes an argument for why highly advanced AI might pose a risk as follows:

The primary concern [with highly advanced AI] is not spooky emergent consciousness but simply the ability to make high-quality decisions. Here, quality refers to the expected outcome utility of actions taken […]. Now we have a problem:

1. The utility function may not be perfectly aligned with the values of the human race, which are (at best) very difficult to pin down.

2. Any sufficiently capable intelligent system will prefer to ensure its own continued existence and to acquire physical and computational resources – not for their own sake, but to succeed in its assigned task.

A system that is optimizing a function of n variables, where the objective depends on a subset of size k<n, will often set the remaining unconstrained variables to extreme values; if one of those unconstrained variables is actually something we care about, the solution found may be highly undesirable. This is essentially the old story of the genie in the lamp, or the sorcerer’s apprentice, or King Midas: you get exactly what you ask for, not what you want.

"Do you think this argument points at an important problem?"

Of the 1322 who answered this question,

- 5% said “No, not a real problem”

- 9% said “No, not an important problem”

- 32% said “Yes, a moderately important problem”

- 41% said “Yes, a very important problem”

- 13% said “Yes, among the most important problems in the field”

"How valuable is it to work on this problem today, compared to other problems in AI?"

Of the 1321 who answered this question,

- 9% said “Much more valuable”

- 22% said “More valuable”

- 39% said “As valuable”

- 22% said “Less valuable”

- 8% said “Much less valuable”

"How hard do you think this problem is compared to other problems in AI?"

Of the 1274 who answered this question,

- 21% said “Much harder”

- 36% said “Harder”

- 30% said “As hard”

- 10% said “Easier”

- 3% said “Much easier”

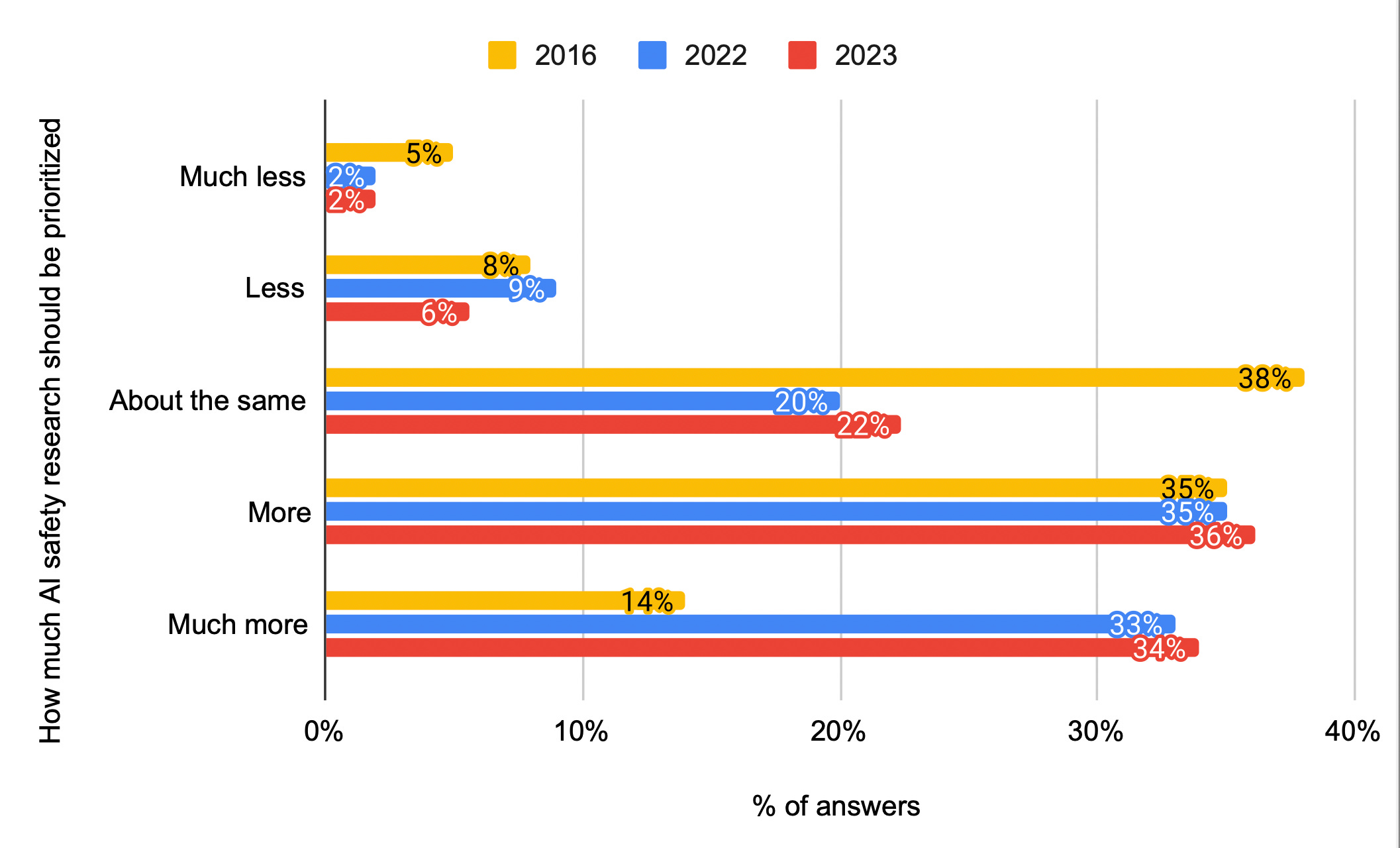

How much should society prioritize AI safety research?

Let 'AI safety research' include any AI-related research that, rather than being primarily aimed at improving the capabilities of AI systems, is instead primarily aimed at minimizing potential risks of AI systems (beyond what is already accomplished for those goals by increasing AI system capabilities).

Examples of AI safety research might include:

* Improving the human-interpretability of machine learning algorithms for the purpose of improving the safety and robustness of AI systems, not focused on improving AI capabilities

* Research on long-term existential risks from AI systems

* AI-specific formal verification research

* Developing methodologies to identify, measure, and mitigate biases in AI models to ensure fair and ethical decision-making.

* Policy research about how to maximize the public benefits of AI

How much should society prioritize AI safety research, relative to how much it is currently prioritized?

Of the 1329 responses to this question,

- 2% said “Much less”

- 6% said “Less”

- 22% said “About the same”

- 36% said “More”

- 34% said “Much more”

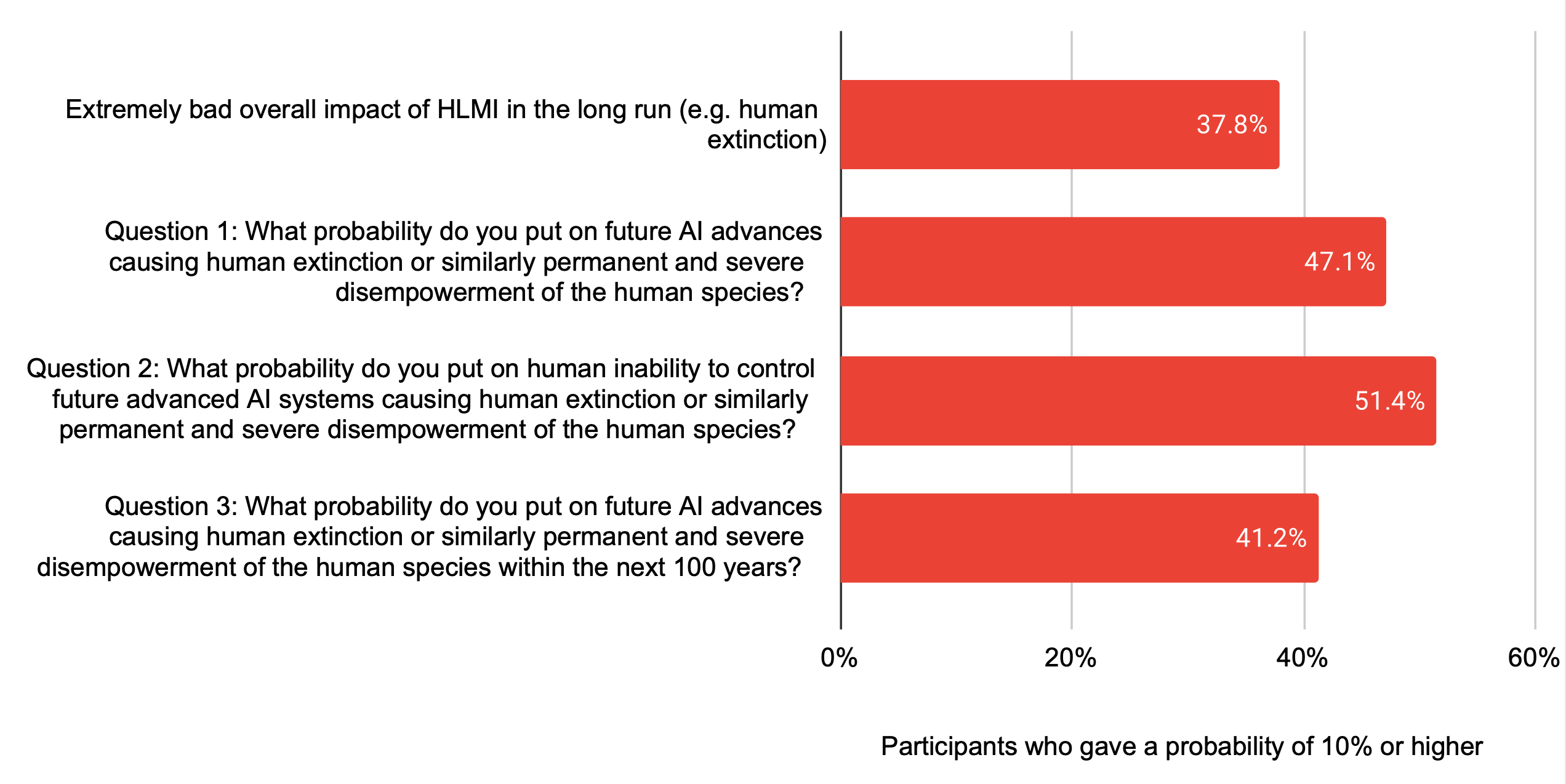

Human extinction

We asked about the likelihood that AI will cause human extinction using three differently phrased questions, for which we collected 655-1321 responses each.

| Question phrasing | Median response | Mean response |
|---|---|---|
| “What probability do you put on future AI advances causing human extinction or similarly permanent and severe disempowerment of the human species?” | 5% | 16.2% |
| “What probability do you put on human inability to control future advanced AI systems causing human extinction or similarly permanent and severe disempowerment of the human species?” | 10% | 19.4% |
| “What probability do you put on future AI advances causing human extinction or similarly permanent and severe disempowerment of the human species within the next 100 years?” | 5% | 14.4% |

Frequently asked questions

How does the seniority of the participants affect the results?

One reasonable concern about an expert survey is that more senior experts are busier and less likely to participate. We found that authors with over 1000 citations were 69% as likely to participate in the survey as the base rate among those we contacted. We also found that seniority had little effect on opinions about the likelihood that HLMI would lead to impacts that were “extremely bad (e.g. human extinction)” (see table below).

| Group | % who gave at least 5% odds to “extremely bad (e.g. human extinction)” impacts from HLMI |
|---|---|
| All participants | 57.80% |
| Has 100+ citations | 62.30% |
| Has 1000+ citations | 59.00% |
| Has 10,000+ citations | 56.30% |
| Started PhD by 2018 | 58.80% |
| Started PhD by 2013 | 58.50% |
| Started PhD by 2003 | 54.70% |
| In current field 5+ years | 54.40% |
| In current field 10+ years | 51.40% |
| In current field 20+ years | 48.00% |
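Each row of this table is the same statistic computed on a different subset of respondents. A minimal sketch for the citation-based rows, using made-up respondents: the `Respondent` record, its fields, and `share_at_least` are hypothetical, “at least 5% odds” is read as a probability of 0.05 or more, and “N+ citations” is read as `citations >= N`. The PhD-start and years-in-field rows would use the same pattern with a different filter.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    citations: int
    p_extremely_bad: float  # probability (0 to 1) put on "extremely bad" HLMI impacts


def share_at_least(respondents, threshold=0.05, min_citations=0):
    """Fraction of respondents with at least `min_citations` citations who put
    at least `threshold` probability on "extremely bad" impacts."""
    group = [r for r in respondents if r.citations >= min_citations]
    if not group:
        return float("nan")
    return sum(r.p_extremely_bad >= threshold for r in group) / len(group)


# Made-up respondents, for illustration only.
sample = [
    Respondent(citations=50, p_extremely_bad=0.10),
    Respondent(citations=150, p_extremely_bad=0.02),
    Respondent(citations=1_200, p_extremely_bad=0.05),
    Respondent(citations=12_000, p_extremely_bad=0.00),
]
for cutoff in (0, 100, 1_000, 10_000):
    print(cutoff, share_at_least(sample, min_citations=cutoff))
# 0 0.5
# 100 0.3333333333333333
# 1000 0.5
# 10000 0.0
```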

Contributions

Authors of the 2023 Expert Survey on Progress in AI are Katja Grace, Julia Fabienne Sandkühler, Harlan Stewart, Stephen Thomas, Ben Weinstein-Raun, and Jan Brauner.

Many thanks for help with this research to Rebecca Ward-Diorio, Jeffrey Heninger, John Salvatier, Nate Silver, Joseph Carlsmith, Justis Mills, Will Macaskill, Zach Stein-Perlman, Shakeel Hashim, Mike Levine, Lucius Caviola, Eli Rose, Max Tegmark, Jaan Tallinn, Shahar Avin, Daniel Filan, David Krueger, Nathan Young, Michelle Hutchinson, Arden Koehler, Nuño Sempere, Naomi Saphra, Soren Mindermann, Dan Hendrycks, Alex Tamkin, Vael Gates, Yonadav Shavit, James Aung, Jacob Hilton, Ryan Greenblatt, and Frederic Arnold.