The range of human intelligence

Published 18 January, 2015; last updated 10 December, 2020

The range of human intelligence seems large relative to the space below it, as measured by performance on tasks we care about—despite the fact that human brains are extremely similar to each other.

Without knowing more about the sources of variation in human performance, however, we cannot conclude much at all about the likely pace of progress in AI: we are likely to observe significant variation regardless of any underlying facts about the nature of intelligence.

Details

Measures of interest

Performance

IQ is one measure of cognitive performance. Chess Elo is a narrower one. We do not have a general measure that is meaningful across the space of possible minds. However, when people speak of ‘superhuman intelligence’ and the intelligence of animals, they imagine that these can be meaningfully placed on some rough spectrum. When we say ‘performance’ we mean this kind of intuitive spectrum.
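To give a sense of what a gap on one such narrow scale means, the standard Elo model converts a rating difference into an expected score. The sketch below is illustrative only: the function name and the example ratings are our own choices, not taken from the sources discussed here.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (win = 1, draw = 0.5, loss = 0) for player A against
    player B under the standard logistic Elo model with a 400-point scale."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


# Illustrative ratings only (assumed for this example).
for gap in (0, 200, 400, 800):
    score = elo_expected_score(1500 + gap, 1500)
    print(f"rating gap {gap:>3}: expected score {score:.3f}")
```

Under this model a 400-point advantage corresponds to odds of ten to one, so equal steps in rating correspond to equal ratios of odds rather than to equal increments of some underlying ability.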

Development effort

We are especially interested in measuring intelligence by the difficulty of building a machine which exhibits that level of intelligence. We will not use a formal unit to measure this distance, but are interested in comparing the range between humans to distances between other milestones, such as that between a mouse and a human, or a rock and a mouse.

Variation in cognitive performance

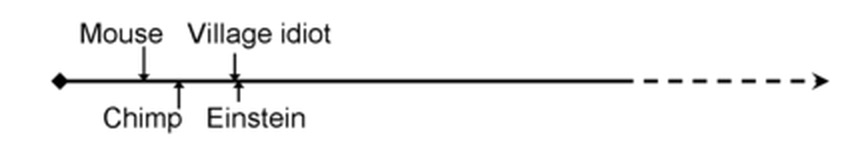

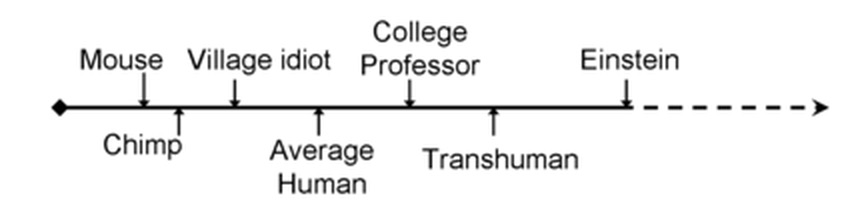

It is sometimes argued that humans occupy a very narrow band in the spectrum of cognitive performance. For instance, Eliezer Yudkowsky defends this rough schemata1—

—over these, which he attributes to others:

Such arguments sometimes go further, suggesting that the AI development effort needed to traverse the distance from the ‘village idiot’ to Einstein is also small, so that, because this distance seems so large to us, AI progress at around human level will seem very fast.

The landscape of performance is not easy to parameterize well, as there are many cognitive tasks and dimensions of cognitive ability, and no good global metric for comparison across different organisms. Nonetheless, we offer several pieces of evidence to suggest that the human range is substantial, relative to the space below it. We do not address here how far above human level the space of possible intelligence reaches.

Low human performance on specific tasks

For most tasks, human performance reaches all of the way to the bottom of the possible spectrum. At the extreme, some comatose humans will fail at almost any cognitive task. Our impression is that people who are completely unable to perform a task are not usually isolated outliers, but that there is a distribution of people spread across the range from completely incapacitated to world-champion level. That is, for a task like ‘recognize a cat’, there are people who can only do slightly better than if they were comatose.

For our purposes we are more interested in where normal human cognitive performance falls relative to the worst and best possible performance, and the best human performance.

Mediocre human performance relative to high human performance

On many tasks, it seems likely that the best humans are many times better than mediocre humans, using relatively objective measures.2

Shockley (1957) found that in science, the productivity of the top researchers in a laboratory was often at least ten times as great as that of the least productive (and most numerous) researchers. Programmers purportedly vary by an order of magnitude in productivity, though this is debated. In one Putnam competition, a third of participants scored nothing, while someone scored 100. Some people have to work ten times harder than others to pass their high school classes.

Note that these differences are among people skilled enough to actually be in the relevant field, which in most cases suggests they are above average. Our impression is that something similar is true in other areas such as sales, entrepreneurship, crafts, and writing, but we have not seen data on them.

These large multipliers on performance at cognitive tasks suggest that the range between mediocre cognitive ability and genius is many times larger than the range below mediocre cognitive ability. However, it is not clear that such differences are common, or to what extent they are due to differences in underlying general cognitive ability, rather than learning or non-cognitive skills, or a range of different cognitive skills that aren’t well correlated.

Human performance spans a wide range in other areas

In qualities other than intelligence, humans appear to span a fairly wide range below their peak levels. For instance, the fastest human runners are multiple times faster than mediocre runners (twice as fast at a 100m sprint, four times as fast for a mile). Humans can vary in height by a factor of about four, and commonly do by a factor of about 1.5. The most accurate painters are hard to distinguish from photographs, while some painters are arguably hard to distinguish from monkeys, which are very easy to distinguish from photographs. These observations weakly suggest that the default expectation should be for humans to span a wide absolute range in cognitive performance also.

AI performance on human tasks

In domains where we have observed human-level performance in machines, we have seen rather gradual improvement across the range of human abilities. Here are eight relevant cases that we know of:

1. Chess (See Time for AI to cross the human performance range in chess): human chess Elo ratings conservatively range from around 800 (beginner) to 2800 (world champion). The following figure illustrates how it took chess AI roughly forty years to move incrementally from 1300 to 2800.

2. Go (See Time for AI to cross the human performance range in Go): Human Go ratings range from 30-20 kyu (beginner) to at least 9p (10p is a special title). Note that the numbers go downwards through kyu levels, then upward through dan levels, then upward through p(rofessional dan) levels. The following figure suggests that it took around 25 years for AI to cover most of this space (the top ratings seem to be closer together than the lower ones, though there are apparently multiple systems which vary).

3. StarCraft (See Time for AI to cross the human range in StarCraft): Beginner level StarCraft-playing AI seems to have been possible since the game was released in 1998. In 2018, AlphaStar beat a professional StarCraft player, suggesting that state-of-the-art StarCraft-playing AI had reached professional level. No AI seems to have superhuman skills in StarCraft.

4. Checkers (See Time for AI to cross the human range in English draughts): According to Wikipedia’s timeline of AI, a program was written in 1952 that could challenge a respectable amateur. In 1994, Chinook beat the second-highest-rated player ever. (In 2007, checkers was solved.) Thus it took around forty years to pass from amateur to world-class checkers-playing. However, we know nothing about whether intermediate progress was incremental.

5. Diagnosing diabetic retinopathy (See Time for AI to cross the human performance range in diabetic retinopathy): Diabetic retinopathy, a complication of diabetes in which high blood sugar levels damage the back of the eye, is diagnosed by examining images of the back of the eye. The first algorithm for detecting diabetic retinopathy, developed in 1996, could not detect it as well as ophthalmologists. In 2016, Google released results for algorithms that could detect diabetic retinopathy about as well as ophthalmologists.

6. Image classification (See Time for AI to cross the human performance range in ImageNet image classification): The first image classifiers were developed in 1998. ImageNet is a large collection of images organized into a hierarchy of noun categories, released in 2009. Our beginner level benchmark for ImageNet classification was first surpassed in 2012, by AlexNet. In 2015, an image classifier surpassed our high human benchmark, suggesting that state-of-the-art AI could classify images about as well as expert humans.

7. Physical manipulation: we have not investigated this much, but our impression is that robots are somewhere in the fumbling and slow part of the human spectrum on some tasks, and that nobody expects them to reach the ‘normal human abilities’ part any time soon (Aaron Dollar estimates robotic grasping manipulation in general is less than one percent of the way to human level from where it was 20 years ago).

8. Jeopardy: AI appears to have taken two or three years to move from lower ‘champion’ level to surpassing world champion level (see figure 9; Watson beat Ken Jennings in 2011). We don’t know how far ‘champion’ level is from the level of a beginner, but would be surprised if it were less than four times the distance traversed here, given the situation in other games, suggesting a minimum of a decade for crossing the human spectrum.

In all of these narrow skills, moving AI from low-level human performance to top-level human performance appears to take on the order of decades. This further undermines the claim that the range of human abilities constitutes a narrow band within the range of possible AI capabilities, though we may expect general intelligence to behave differently, for example due to smaller training effects.
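The intervals quoted in the list above can be tabulated directly. The sketch below only restates dates and durations given in this article; the variable and function names are our own, and the endpoints (for example, which year counts as ‘beginner level’) are rough judgment calls rather than precise measurements. Physical manipulation and Jeopardy are left out because the list gives no interval for the full human range in those cases.

```python
# (start_year, end_year) pairs as stated in the list above.
dated_cases = {
    "StarCraft": (1998, 2018),                # game release -> professional level
    "Checkers": (1952, 1994),                 # respectable amateur -> Chinook
    "Diabetic retinopathy": (1996, 2016),     # first algorithm -> ophthalmologist level
    "ImageNet classification": (2012, 2015),  # beginner benchmark -> high human benchmark
}

# Durations the list states directly, in years, rather than as year pairs.
stated_durations = {"Chess": 40, "Go": 25}


def crossing_times(dated, stated):
    """Return approximate years taken to cross the human range, per domain."""
    years = {name: end - start for name, (start, end) in dated.items()}
    years.update(stated)
    return years


times = crossing_times(dated_cases, stated_durations)
for name, years in sorted(times.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<26}{years:>3} years")
```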

On the other hand, most of the examples here—and in particular the ones that we know the most about—are board games, so this phenomenon may be less usual elsewhere. We have not investigated areas such as Texas hold ’em, arithmetic or constraint satisfaction sufficiently to add them to this list.

What can we infer from human variation?

The brains of humans are nearly identical, by comparison to the brains of other animals or to other possible brains that could exist. This might suggest that the engineering effort required to move across the human range of intelligences is quite small, compared to the engineering effort required to move from very sub-human to human-level intelligence (e.g. see p21 and 29, p70). The similarity of human brains also suggests that the range of human intelligence is smaller than it seems, and that its apparent breadth is due to anthropocentrism (see the same sources). According to these views, board games are an exceptional case: for most problems, it will not take AI very long to close the gap between “mediocre human” and “excellent human.”

However, we should not be surprised to find meaningful variation in cognitive performance regardless of the difficulty of improving the human brain. This makes it difficult to infer much from the observed variations.

Why should we not be surprised? De novo deleterious mutations are introduced into the genome with each generation, and the prevalence of such mutations is determined by the balance of mutation rates and negative selection. If de novo mutations significantly impact cognitive performance, then there must necessarily be significant selection for higher intelligence, and hence behaviorally relevant differences in intelligence. This balance is determined entirely by the mutation rate, the strength of selection for intelligence, and the negative impact of the average mutation.
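A minimal sketch of this balance, under simplifying assumptions that are ours rather than the sources': each individual gains a Poisson-distributed number of new deleterious mutations per generation with mean U, and each mutation multiplies fitness by (1 - s). In that textbook model the number of mutations carried stays Poisson-distributed, and its mean settles at U / s. The function name and parameter values below are hypothetical and purely illustrative.

```python
import math


def mutation_load_at_balance(U: float, s: float, generations: int = 5000) -> float:
    """Iterate the mean number of deleterious mutations per individual.

    Assumed model (not from the article): mutation counts are Poisson
    distributed, fitness is (1 - s) ** count, and each generation adds
    a Poisson(U) number of new mutations. Selection scales the mean by
    (1 - s); mutation then adds U.
    """
    mean = 0.0  # start from a mutation-free population
    for _ in range(generations):
        mean = mean * (1.0 - s) + U
    return mean


# Hypothetical parameter values, chosen only for illustration.
U, s = 1.0, 0.02
mean = mutation_load_at_balance(U, s)
spread = math.sqrt(mean)  # standard deviation of a Poisson count

print(f"simulated mean load: {mean:.2f}")
print(f"analytic U / s:      {U / s:.2f}")
print(f"std. dev. of load:   {spread:.2f}")
```

In this toy model the spread in mutation load between individuals depends only on U and s. Nothing in it refers to how hard it would be to improve on the mutation-free design.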

You can often make a machine worse by breaking a random piece, but this does not mean that the machine was easy to design or that you can make the machine better by adding a random piece. Similarly, levels of variation of cognitive performance in humans may tell us very little about the difficulty of making a human-level intelligence smarter.

In the extreme case, we can observe that brain-dead humans often have very similar cognitive architectures. But this does not mean that it is easy to start from an AI at the level of a dead human and reach one at the level of a living human.

Because we should not be surprised to see significant variation, independent of the underlying facts about intelligence, we cannot infer very much from this variation. The strength of our conclusions is limited by the extent of our possible surprise.

By better understanding the sources of variation in human performance we may be able to draw stronger conclusions. For example, if human intelligence is improving rapidly due to the introduction of new architectural improvements to the brain, this suggests that discovering architectural improvements is not too difficult. If we discover that spending more energy on thinking makes humans substantially smarter, this suggests that scaling up intelligences leads to large performance changes. And so on. Existing research in biology addresses the role of deleterious mutations, and depending on the results this literature could be used to draw meaningful inferences.

These considerations also suggest that brain similarity can’t tell us much about the “true” range of human performance. This isn’t too surprising, in light of the analogy with other domains. For example, although the bodies of different runners have nearly identical designs, the worst runners are not nearly as good as the best.

This background rate of human-range crossing is less informative about the future in scenarios where the increasing machine performance of interest is coming about in a substantially different way from how it came about in the past. For instance, it is sometimes hypothesized that major performance improvements will come from fast ‘recursive self improvement’, in which case the characteristic time scale might be much shorter. However, the scale of the human performance range (and time to cross it) relative to the area below the human range should still be informative.

- “My Childhood Role Model – LessWrong 2.0.” Accessed June 3, 2020. https://www.lesswrong.com/posts/3Jpchgy53D2gB5qdk/my-childhood-role-model.